Graduate School

“Stuck. What it is, I think there is a jump some people have to make, sometimes, and if they don’t do it, then they’re stuck good … And Rudy never did it.”

“Like my father wanting to get me out of Maas? Is that a jump?”

“No. Some jumps you have to decide on for yourself. Just figure there’s something better waiting for you somewhere….” He paused, feeling suddenly ridiculous, and bit into the sandwich.

After spending a fair amount of time in industry, I’ve decided it’s time to change directions, to make a jump. This month I’ll be returning to school to work on my master’s degree in Computer Science. Based on what makes me happy, and where I’d like to take my career, it’s the right path and the right time to make this change. I’m looking forward to making the most of it.

As far as what it means for the site, it’s tough to say. August 8th was the six-year anniversary of the site, and after writing and publishing my interests for so long, it makes sense to continue to do so, but on the other hand, time is precious. So we’ll see. As always, take a look through the archive, follow by email, or by RSS for the latest happenings.

3D Printed Toy Robot


Introduction

At the beginning of the year I decided to change my approach to working on personal projects; in short, more time on quality and less on quantity. With that mindset I thought about my interests and how they could come together to form an interdisciplinary project that would be ambitious, but doable. After much thought, I zeroed in on mechatronics (the interplay of form, computation, electronics and mechanics) and began to explore how I could draw from these disciplines to form the foundation of my next project.

Like most people coming from a software background, much of what I work on is abstract in nature. Every once in a while I have a strong desire to work on something tangible. Lacking the proper space and tools to work with traditional mediums like wood and metal, I thought about newer mediums I’d read about and directed my attention to 3D printing. Despite being 30 years old, the technology has only become accessible to consumers within the past seven years with the launch of several online services and open source printers. Having watched this growth, I was curious about the technology and decided it would be worth exploring in this project.

I’d been looking for an excuse to get back into electronics and work on a project that would require me to delve deeper into analog circuit design. This desire was drawn from the belief that I ought to be able to reason about computation regardless of how it is represented, be it by mathematics, software or hardware. This of course meant avoiding microcontrollers; my goal here was to better learn the foundations of the discipline, not make something quickly and cheaply.

To stay true to the mechatronics concept, I decided I would incorporate mechanical elements into the project. Having zero knowledge of mechanics, I knew whatever I’d make would be simplistic and not much to write about. However, I felt that whatever I decided to do, I wanted the mechanically driven functionality to ignite a sense of fun in whoever was interacting with the end result. After all, the end result is something you might only interact with briefly, but it should be memorable, and what better way to achieve that than to make it entertaining?

I searched for inspiration to figure out how I would tie all of these disciplines together. Growing up I read a lot of Sci-fi literature and a lot of that material stuck with me over the years, especially the portrayal of robots from the golden age. Above all, it captured the sense that anything was possible with technology and served as a great source of inspiration in my career. So without much surprise, I found the inspiration I needed for the project in my robot avatar. My mind was flickering with ideas as to how I could bring that simple icon to life and with that excitement I began my work.

Over the course of eight months I taught myself the necessary disciplines and skills to carry out my vision of building a simple 3D printed toy robot. Having finished my work at the end of July, I began compiling my notes and documenting my work and concluded this writing in the middle of October. This post is the culmination of that work and it will cover my technical work, thinking and experience. Above all, this is my story of bringing an idea to fruition while digging into the world of traditional engineering.

Outline

The scope of the project is fairly broad, so naturally, there’s a lot that I’m going to cover in this post. To help set some expectations on what you’ll encounter, I’m going to cover the three main stages of the project consisting of planning, building and evaluating; each covering the technical aspects related to industrial design, electronics and mechanics. In the planning section you can expect to read about the requirements, project plan and design of the product. In the building section, I’m going to cover the process of sourcing materials, prototyping and building the finished product. I’ll be wrapping up with a section dedicated to how the product was tested and some reflections on how I would approach the project again given the experience.

Related Work

While I was in the midst of doing some brainstorming last December I came across a series of YouTube videos by Jaimie Mantzel covering his experience building 3D printed toy hexapods. His “3D printed big robot” series really captured his enthusiasm and energy for the medium and while I wasn’t going to be making something as complicated as a hexapod, it was helpful to get a window into someone else’s mind and see how they approach the problem of making a product from scratch. Hopefully this document will inspire others to get out there and make something as much as these videos inspired me to do the same.

Planning Phase

Requirements

I designed my avatar about ten years ago and in reflecting on how I illustrated it at the time I began to think about what it would do in the real world. For starters, I envisioned an illuminated eye and chest opening with the latter glowing on and off. The second thing I envisioned was that the arms would be able to swing back and forth opposite to one another giving the illusion of body language depending on the initial position of the arms: straight down giving the impression of marching, forward as though it were in a zombie state, all the way up as though in a panic and flung backward as though it were running around frantically. In short, a variety of different personas.

Implicit to these behaviors are some underlying requirements. The toy requires power, so it makes sense to provide an on-off button somewhere on the design that is easy to access while the arms are in motion. Since the arms are in motion, it begs the question of how long the arms swing in each direction and how quickly. Finally, there is the frequency at which the chest lights pulsate on and off and with what type of waveform. The physical enclosure would be required to enclose all of the electronics and hardware components, provide a means of assembling the parts within the enclosure, and provide a mechanism for securely closing the enclosure.

Since we are talking about engineering it makes sense to have a handful of engineering centric requirements. From an electronics engineering point of view, the components must be within their stated current and voltage tolerances as the power supply diminishes over time. From a mechanical point of view, the motor must supply sufficient torque to the mechanical system to ensure efficient transfer of motion to the arms so that the movement remains fluid, plus the product would need to be stable under static and dynamic loads. Finally, from an industrial design point of view, the product itself must be sturdy enough to withstand the forces that will be applied to it and be designed in a way that the fabrication process will yield a quality result.

Ultimately, we are talking about making a consumer product. That product needs to be polished in its appearance and stay true to the original avatar illustration. The product needs to be conscious of its life cycle as well in terms of being made from environmentally friendly components and practices, to being efficient in its use of energy and being capable of being recycled at the end of its life. As is the case with any project, there are a number of more nuanced requirements imposed by each of the components and tools used in the process of creating the product. I will discuss along the way how these constraints affected the project.

Methodology

Thinking about how to approach the project, I thought about how I’d approached projects of similar size and scope. My approach typically follows the iterative and incremental development methodology; do a lot of up front planning, cycle through some iterations and wrap up with a deployment. Rinse and repeat.


In the introduction I talked about the thought process and motivation behind the brainstorming that generated the requirements. From these requirements, I broke the problem down into smaller, more digestible components that I could research in order to learn the governing models that dictate how those components behave, both from a mathematical point of view and on the computer through simulations. With that understanding, it was possible to put together a design that fulfills a specific set of conditions that result in the desired set of outputs. This design is the cornerstone of the initial planning.

The bulk of the work consists of iterations that ultimately result in working components based on the design produced in the planning stage. Each iteration corresponds to a single component/requirement and starts off with building a prototype. For electronics this is done by prototyping on a breadboard; for mechanics and industrial design, with cardboard elements. The result is a functioning prototype that is used to create a refined design for the component, which is then built (for example, as a soldered protoboard or 3D printed volume). A completed component is tested and, if accepted, stored for assembly.

As accepted components are completed, they are assembled into the broader view of the product. Each component is tested as part of the whole and if working correctly, fastened into place. Once all of the components are incorporated, the end product is tested and ultimately accepted. Throughout the process, if a particular output for an activity fails, then the previous activity is revisited until the root cause is identified, corrected and the process moves forward to completion.

Design

Industrial Design

Part Design

Knowing I didn’t know what I didn’t know about how to make a durable product, I did some research about part design and came across a set of design guidelines from Bayer Material Sciences covering the type of features that are added to products to make them more resilient to the kind of abuse consumer products are subjected to. This was helpful since it gave me a set of primitives to work with, and in this project I ended up using a few of these features for providing strength to the product (ribs, gussets and trusses) and a few for allowing parts to be mated together (counterbores, taps and bosses).


Ribs are used whenever there is a need to reduce the thickness of a plate and retain the plate’s original strength. When the thickness of the plate is reduced, so is the volume, and hence the cost, but so too is the plate’s strength. To compensate, a rib is placed along the center of the plate and this increases the plate’s strength to its original state. A similar approach is deployed when talking about gussets as a way of providing added strength between two orthogonal faces. While not depicted above, the last technique deployed was the truss. Like what you’d find in the frames of your roof, the truss is a series of slats that form a triangular mesh that redistributes forces placed on the frame, allowing it to take on greater load.

Counterbores are used so that the top of a machine screw sits flush or below the surface that needs to be fastened to another. The machine screws are then fastened to either a tap or a boss. A tap is a bored out section of material that is then threaded to act like a nut. Since we want to minimize material, the alternative is to carve out the material around the bore and leave a standing post that the machine screw will fasten to. Since the boss is freestanding, it may have gussets placed around its perimeter depending on the boss’s height.

Modeling

The first step in getting the 3D model put together was to use the pixel art version of my avatar to create a vectorized version for the purpose of developing a system of units and measurements that I could then use to develop a 3D model of the product in OpenSCAD. After having a chance to learn the software and its limitations, I set out to create a series of conventions to make it easy to work with parts, assemblies and enclosures in a variety of units and scales. Since real world elements would factor into the design of the product, I included all of the miscellaneous parts in the CAD model as well to make it easier to reason about the end result. After about a month of work the model was completed. Let’s take a look at how it all came together.

The product consists of two arms, body, bracket and back plate. Each arm consists of a rounded shoulder with openings for fastening shaft collars, the shaft collars themselves and the shaft that extends from the body. The extended arm is hollow with interior ribs to add strength to the printed result. To support the printing process, small holes are added on the underside so that supporting material can be blown out.

On the exterior face of the back plate, the embossed designer logo, copyright and production number are provided to identify the product. Counterbores allow machine screws to be fastened to the main body at the base of the legs and back of the head. On the interior face, the electronic components are fastened to bosses using machine screws. Since the back plate is just a thin plate, ribs were added to increase that plate’s strength.

The body has an opening on the top of the head for the on-off switch, and the face an opening for the eye plate and on the chest an opening for the chest plate. Counterbores line the hips so that machine screws can be fastened to the bracket to hold it in place. On the interior, horizontal bosses mate with counterbores on the back plate and several trusses and ribs were added to provide strength.


A shelf separates the eye cavity from the chest cavity so that illumination from one doesn’t interfere with the other. Under the interior of the shoulders are the RIC for the material, WEEE logo, and along the leg, text describing the product with title, designer, date and copyright.

Finally, the bracket consists of two flanges with openings for ball bearings and a main shelf with an opening for the motor and set screws to secure the motor. The bracket has four taps that mate with the counterbores along the hip of the body.

Analysis

While I had a set of guidelines on how to make the product durable, most of the modeling process was more art than engineering. Since the 3D printing process would be fairly expensive, I wanted some additional confidence that the result was going to come out sturdy, so I thought a bit about it from a physics point of view. I knew of stress-strain analysis and that to understand how a complex object responds to loads I would need to use a Finite Element Analysis (FEA) solver on the 3D model. I researched the subject a bit, and once I felt I had a working understanding, I decided to use CalculiX to perform the actual computations. This section will cover the background, process and results at a very high level.

Stress-Strain Analysis

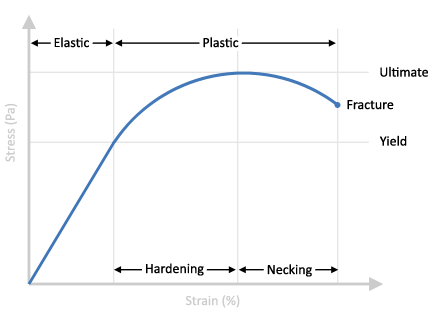

When a physical body is put under load, the particles of the body exert forces upon one another; the extent of these internal forces is quantified by stress. When the load begins to increase beyond the material’s ability to cope, the particles may begin to displace; how much they move about is quantified by strain.


For small amounts of strain the material will behave elastically; returning to its original form once any applied forces are removed. As the amount of strain increases, and consequently the stress past the yield stress, the material begins to behave plastically; first hardening and then beginning to neck (i.e., thinning and separating) until it finally fractures. The specific critical values will depend entirely on the material and in this project an isotropic material, Polyamide PA 2200 (Nylon 12), will be used in the 3D printing process.

For a durable product, we obviously want to avoid subjecting the body to any forces that will result in permanent deformation which means keeping stress below the yield stress and even lower from an engineering point of view. The acceptable upper bound is given by a safety factor that linearly reduces the yield stress to an acceptable level. According to the literature a safety factor in the range is appropriate.

To establish a design criterion, I went with a safety factor of . The material datasheet omitted the precise yield value, but upon further research, the average yield stress for Nylon 12 was reported to be

. Thus, an upper bound of

will be used as an acceptable level of stress, which is on the order of a five-hundred-pound object resting on a square centimeter of area. While that may make the idea of performing the full analysis overkill, it’s still valuable from an intuition building point of view.
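As a rough sanity check on the comparison above, the sketch below converts a weight resting on an area into a pressure and applies a safety factor to a yield stress. The function names and the numbers in the usage lines are illustrative assumptions, not values taken from the datasheet or from this project.

```python
# Exact conversion factor: one pound-force expressed in newtons.
LBF_TO_NEWTON = 4.4482216152605


def allowable_stress(yield_stress_mpa, safety_factor):
    """Linearly reduce the yield stress by the safety factor."""
    if safety_factor <= 0:
        raise ValueError("safety factor must be positive")
    return yield_stress_mpa / safety_factor


def weight_on_area_to_mpa(pounds_force, area_cm2):
    """Pressure (MPa) produced by a weight resting on an area."""
    newtons = pounds_force * LBF_TO_NEWTON
    area_m2 = area_cm2 * 1e-4          # 1 cm^2 = 1e-4 m^2
    return newtons / area_m2 / 1e6     # Pa -> MPa


if __name__ == "__main__":
    # Hypothetical inputs, for illustration only.
    print(allowable_stress(yield_stress_mpa=40.0, safety_factor=2.0))
    print(weight_on_area_to_mpa(pounds_force=500.0, area_cm2=1.0))
```

Five hundred pounds on one square centimeter works out to roughly 22 MPa, which gives a feel for the scale of stress being discussed.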

Linear Stress-Strain Relationship

Given that we want to stay within the linear region, we can now begin to look at the specific relationship between stress, $\sigma$, and strain, $\varepsilon$, for a three dimensional point which is given by a system of partial differential equations subject to equilibrium and compatibility conditions, fixed (Dirichlet) and load bearing (Neumann) boundary conditions:

$$\sigma = D \varepsilon$$

Both stress and strain are second-order tensors that relate how each of the three bases of a coordinate system relates to one another. When acting in the same dimension, the result is normal stress, $\sigma_{ii}$, and strain, $\varepsilon_{ii}$, otherwise the result is shear stress, $\tau_{ij}$, and (engineering) shear strain, $\gamma_{ij}$. The strain terms are partial derivatives of the displacement field, $u$, to be determined and stress terms are constants. $D$ is the elasticity matrix that relates the two.

Since we are working with an isotropic material, the resulting equations can be simplified to the following:

| Characteristic | Description | Polyamide 2200 Values |
|---|---|---|
| Young’s modulus | Slope of the stress-strain curve in the linear region. | |
| Poisson’s ratio | Measure of how much a material will narrow as a consequence of being stretched. | |
| Shear modulus | Function of the previous two; quantifies how much force is needed before the material begins to shear. | |

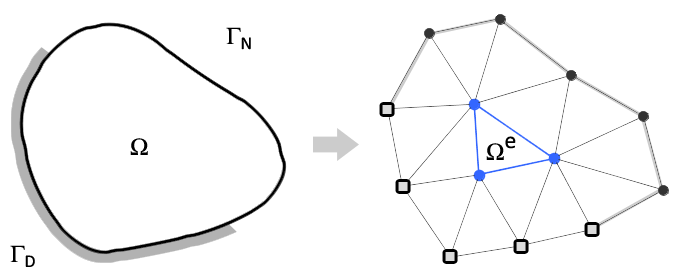
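To make the relationship between the three characteristics concrete, here is a minimal sketch of the standard isotropic (Hooke's law) elasticity matrix built from Young's modulus and Poisson's ratio. The ordering of the strain components (xx, yy, zz, yz, xz, xy), the function names and the numeric inputs are assumptions made for illustration; the actual PA 2200 values are not reproduced here.

```python
def shear_modulus(youngs_modulus, poissons_ratio):
    """Shear modulus G = E / (2 (1 + nu)) for an isotropic material."""
    return youngs_modulus / (2.0 * (1.0 + poissons_ratio))


def isotropic_elasticity_matrix(youngs_modulus, poissons_ratio):
    """6x6 matrix D with stress = D * strain, using engineering shear strain."""
    e, nu = youngs_modulus, poissons_ratio
    lam = e * nu / ((1.0 + nu) * (1.0 - 2.0 * nu))  # Lame's first parameter
    g = shear_modulus(e, nu)
    d = [[0.0] * 6 for _ in range(6)]
    for i in range(3):
        for j in range(3):
            d[i][j] = lam + (2.0 * g if i == j else 0.0)  # normal terms
    for i in range(3, 6):
        d[i][i] = g                                       # shear terms
    return d


def stress_from_strain(d, strain):
    """Multiply the elasticity matrix by a six-component strain vector."""
    return [sum(d[i][j] * strain[j] for j in range(6)) for i in range(6)]


if __name__ == "__main__":
    # Hypothetical material: E = 1000 MPa, nu = 0.25.
    d = isotropic_elasticity_matrix(1000.0, 0.25)
    # Strain state of a bar pulled along x with a stress of 10 MPa.
    print(stress_from_strain(d, [0.01, -0.0025, -0.0025, 0.0, 0.0, 0.0]))
```

Feeding in the strain of a bar stretched along one axis returns a stress with a single nonzero component, which is a quick way to check that the matrix is assembled correctly.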

Finite Element Method (FEM)

To determine the stress and strain of a body under load we’ll rely on the FEM, a general purpose numerical method for calculating the approximate solution to a boundary value problem whose analytic solution is elusive. The general idea is that the problem domain can be broken into smaller elements, each analyzed individually, and the corresponding analyses combined so that an overarching solution can be determined.


In the discretization step, the exact elements depend on the nature of the problem being solved; in the case of stress analysis, the body is divided into (linear or quadratic) tetrahedral elements consisting of nodes, edges and faces. In general, the nodes are subjected to (unknown) internal and (known) external forces and the displacements of the nodes are to be determined. The relationship between the two is assumed to be linear. Thus, the following equation characterizes the state of the physical model at the element.

$$K u = f$$

where $K$ is the stiffness matrix, $u$ is the displacement vector and $f$ is the force vector (also known as the load vector). Fixed boundary conditions are defined in the displacement vector and load boundary conditions in the force vector when necessary. After defining the relationship at each node, the assembly process aggregates each statement into the global stiffness matrix which represents the state of the full physical model.

Standard algorithms such as Gaussian Elimination and the Gauss-Seidel Method can be used to solve for the displacement vector. With the displacement vector, stress and strain can then be computed at the nodes and interpolated across each element to produce the desired stress analysis. As the number of elements increases, the error between the exact and approximate solution will decrease. This means that the FEM solution serves as a lower bound to the actual solution.
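The assemble-then-solve procedure is easier to see on a toy problem than on a tetrahedral mesh. The sketch below is not the analysis performed for this project; it assumes a one-dimensional bar split into equal two-node elements, fixed at one end and pulled at the other, and all names and numbers are hypothetical.

```python
def assemble_bar_stiffness(num_elements, element_stiffness):
    """Aggregate each element's 2x2 stiffness into the global matrix."""
    n = num_elements + 1  # number of nodes
    k = [[0.0] * n for _ in range(n)]
    for e in range(num_elements):
        # Element e joins node e and node e + 1: k_e * [[1, -1], [-1, 1]].
        k[e][e] += element_stiffness
        k[e][e + 1] -= element_stiffness
        k[e + 1][e] -= element_stiffness
        k[e + 1][e + 1] += element_stiffness
    return k


def solve_gaussian(a, b):
    """Solve a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        s = sum(m[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (m[i][n] - s) / m[i][i]
    return x


def bar_displacements(length, area, youngs_modulus, tip_force, num_elements):
    """Nodal displacements of a bar fixed at node 0 and loaded at the tip."""
    element_stiffness = youngs_modulus * area / (length / num_elements)
    k = assemble_bar_stiffness(num_elements, element_stiffness)
    # Fixed (Dirichlet) condition: node 0 cannot move, so drop its row/column.
    k_reduced = [row[1:] for row in k[1:]]
    # Load (Neumann) condition: the external force acts on the last node only.
    f = [0.0] * (num_elements - 1) + [tip_force]
    return [0.0] + solve_gaussian(k_reduced, f)


if __name__ == "__main__":
    print(bar_displacements(1.0, 1.0, 100.0, 10.0, num_elements=4))
```

For this simple case the tip displacement can be checked by hand against force times length divided by the product of Young's modulus and area.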

Tool Chain


Given the theory, it’s time to assemble the tools to carry out the job. The abundance of free computer aided design (CAD) and engineering (CAE) software made it easy to narrow down the options to OpenSCAD to define the geometry, MeshLab to clean the geometry, Netgen to mesh and define boundary conditions, and finally CalculiX to perform the necessary calculations and visualize the analysis. While each tool is excellent at carrying out its technical tasks, they are less polished from a usability point of view so additional time was spent digging under the hood to deduce expected behavior. Given the extra time involved, it’s worth covering the steps taken to obtain the end results.

The geometry of the product was modeled in OpenSCAD using its built-in scripting language. To share the geometry with Netgen, it is exported as a Standard Tessellation Language (STL) file which consists of a lattice of triangular faces and their corresponding normal vectors. Sometimes I found the exported STL file from OpenSCAD would cause Netgen to have a hard time interpreting the results, so MeshLab was used to pre-process the STL file and then hand it off to Netgen.
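To illustrate what an STL file holds, the following sketch writes a list of triangles in the ASCII flavor of the format, computing each facet's normal from its vertices. It is an illustration of the file layout only, not how OpenSCAD produces its export, and the helper names are made up for this example. Degenerate (zero-area) triangles are not handled.

```python
import math


def facet_normal(v0, v1, v2):
    """Unit normal of a triangle from the cross product of two edges."""
    ux, uy, uz = (v1[i] - v0[i] for i in range(3))
    wx, wy, wz = (v2[i] - v0[i] for i in range(3))
    nx = uy * wz - uz * wy
    ny = uz * wx - ux * wz
    nz = ux * wy - uy * wx
    length = math.sqrt(nx * nx + ny * ny + nz * nz)
    return (nx / length, ny / length, nz / length)


def ascii_stl(name, triangles):
    """Render triangles (each a tuple of three xyz vertices) as ASCII STL."""
    lines = ["solid {}".format(name)]
    for tri in triangles:
        lines.append("  facet normal {:e} {:e} {:e}".format(*facet_normal(*tri)))
        lines.append("    outer loop")
        for vertex in tri:
            lines.append("      vertex {:e} {:e} {:e}".format(*vertex))
        lines.append("    endloop")
        lines.append("  endfacet")
    lines.append("endsolid {}".format(name))
    return "\n".join(lines)


if __name__ == "__main__":
    triangle = ((0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0))
    print(ascii_stl("example", [triangle]))
```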

Netgen is used to load the geometry, generate the mesh and define boundary conditions. Once the geometry is loaded and verified with the built-in STL doctor, the engine is configured to generate a mesh and the process (based on the Delaunay algorithm) is carried out. Once the resulting mesh is available, the faces that correspond to the boundary conditions are assigned identifiers so they can be easily identified in CalculiX. The end result is exported as a neutral volume file that CalculiX will be able to work with.

CalculiX consists of two components: GraphiX (CGX) and CrunchiX (CCX). CGX is used as a pre-processor to export the mesh in a format (MSH) that CCX can easily interpret, and to specify and export the boundary conditions consisting of the fixed surfaces (NAM) and load conditions (DLO). CCX takes a hand written INP file that relates the material properties, mesh, and boundary conditions to the type of analysis to perform and then CCX carries out that analysis and outputs a series of files, most notably, the FRD file. CGX is then used as a post-processor to visualize the resulting stress and deformation results.

Finite Element Analysis (FEA)

The completed product will have pressure applied to the top of the head whenever the pushbutton is pushed to turn the product on or off; based on the design criteria, a uniform static pressure of will be applied across the top of the head for the load boundary condition. It will be assumed that the product is sitting on a surface such that the bases of the feet are fixed producing the remaining boundary conditions.

Six configurations were run in order to evaluate the designs consisting of three mesh granularities against the model before and after strengthening enhancements were introduced. For the purpose of the analysis, it is assumed that the eye, chest and back plate are fixed to the body.


Looking at the plots, the maximum displacement is centered about the through hole for the pushbutton which is consistent with one’s intuition. It appears that stress is most concentrated along the front where the head meets the shoulders and along the rim of the through hole for the pushbutton which matches up with the literature. After enhancements were introduced, it appears that displacement was reduced and that stress became more concentrated. Quantitatively:

| Enhancement | Mesh Size | Min Stress (MPa) | Max Stress (MPa) | Min Displacement (mm) | Max Displacement (mm) |
|---|---|---|---|---|---|
| Before | Moderate | 0.0166 | 1.27 | 0 | 0.519 |
| Before | Fine | 0.0109 | 1.83 | 0 | 0.888 |
| Before | Very Fine | 0.00151 | 5.99 | 0 | 2.01 |
| After | Moderate | 0.000648 | 2.5 | 0 | 0.631 |
| After | Fine | 0.000348 | 5.06 | 0 | 1.11 |
| After | Very Fine | 0.000148 | 11.2 | 0 | 1.75 |

After collecting the extrema from the test cases, the stress results seemed spurious to me. It didn’t feel intuitively right that applying a large amount of pressure to the head would result in at most a half of that pressure appearing throughout the body. I am assuming that there was a mix-up in the order of magnitude of the units somewhere along the way. If the calculations are correct, then everything will be well below the yield stress and the product will be able to support what seems like a lot of stress. On the other hand, if the numbers are wrong, then I can’t make any claims other than relative improvements in the before and after, which was the main reason for carrying out the analysis.

From the literature, a finer mesh (i.e., a greater number of nodes) results in more accurate results. Looking at the outcome of the “Very Fine” mesh shows that the maximum stress in the product after enhancements is greater than the maximum stress before changes. However, the product is more rigid after the enhancement and admitted a reduced maximum displacement. This seems like an acceptable tradeoff since the goal was to make a product sturdier through enhancements.
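The before-and-after comparison can be made explicit with a few lines of arithmetic. The sketch below copies the maximum stress and displacement values from the results table and reports the relative change for each mesh size; the helper names are illustrative.

```python
# (max stress in MPa, max displacement in mm), copied from the results table.
RESULTS = {
    "Moderate": {"before": (1.27, 0.519), "after": (2.5, 0.631)},
    "Fine": {"before": (1.83, 0.888), "after": (5.06, 1.11)},
    "Very Fine": {"before": (5.99, 2.01), "after": (11.2, 1.75)},
}


def percent_change(before, after):
    """Relative change from before to after, in percent."""
    return 100.0 * (after - before) / before


def summarize(results):
    """Map each mesh size to (stress change %, displacement change %)."""
    summary = {}
    for mesh, values in results.items():
        stress_before, disp_before = values["before"]
        stress_after, disp_after = values["after"]
        summary[mesh] = (
            percent_change(stress_before, stress_after),
            percent_change(disp_before, disp_after),
        )
    return summary


if __name__ == "__main__":
    for mesh, (d_stress, d_disp) in summarize(RESULTS).items():
        print("{}: max stress {:+.1f}%, max displacement {:+.1f}%".format(
            mesh, d_stress, d_disp))
```

Only the Very Fine mesh shows a lower maximum displacement after the enhancements (roughly 13% lower); the two coarser meshes report an increase, so the conclusion rests on trusting the finest mesh.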

Mechanics

Construction


The next step in completing the design of the product was to focus on how to make a machine to rotate the arms. Not knowing a whole lot, the first step was to read up on the established elements and to think how they could be used to fulfill my needs. Out of this reading I came across the differential and how it was configured to rotate shafts at different speeds. Deconstructing the mechanism down to its primitive components enabled me to see how I could borrow from that design to come up with my own.

Gearmotor

At the heart of the mechanical system is a 100:1 (gear ratio) gearmotor consisting of a direct current (DC) motor and a gear train. For every rotation of the output shaft, the motor’s shaft rotates 100 times. This is achieved through the arrangement of gears in the gear train attached to the DC motor’s shaft. Since torque is proportional to the gear ratio, this means we get greater mechanical advantage.
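The trade between speed and torque through the gearbox can be sketched as follows. This assumes an ideal gear train unless an efficiency below one is supplied; the function name and the motor figures in the usage line are hypothetical and not taken from the gearmotor's datasheet.

```python
def gearmotor_output(motor_speed_rpm, motor_torque, gear_ratio=100.0,
                     efficiency=1.0):
    """Return (output speed, output torque) after a reduction gear train.

    Speed is divided by the gear ratio and torque is multiplied by it,
    scaled by an assumed efficiency between 0 and 1.
    """
    if gear_ratio <= 0:
        raise ValueError("gear ratio must be positive")
    output_speed = motor_speed_rpm / gear_ratio
    output_torque = motor_torque * gear_ratio * efficiency
    return output_speed, output_torque


if __name__ == "__main__":
    # Hypothetical motor: 6000 RPM and 0.001 N*m at the motor shaft.
    print(gearmotor_output(6000.0, 0.001))
```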

Gear Train

The gearmotor is used to drive a gear train consisting of three gears: a pinion gear attached to the shaft of the gearmotor and two bevel gears that are perpendicular to the pinion. As the pinion gear rotates clockwise (resp. counterclockwise), one of the bevel gears will rotate clockwise (resp. counterclockwise) and the other will rotate counterclockwise (resp. clockwise). The pinion gear and bevel gears that will be used are in a ratio of 1.3:1.

Shaft Assembly

To fix elements to the shaft, shaft collars are used to pinch the element into place along the shaft and each shaft collar is then fastened to the shaft using set screws to retain the position and inward pressure on the element. Each bevel gear is connected to a shaft that extends outward to an arm. The main elements fastened using the sandwiching technique are the bevel gears, radial ball bearings that mesh with the bracket and body, and the arm. Outside the body, a spacer is used to displace the arm from the body and openings exist in the shoulder of the arm to access the shaft collars used to secure the arm.

Bracket

A modified clevis bracket will be used to hold the mechanical elements in place. The bracket consists of an opening in the middle to house the gearmotor along with two set screw openings to fasten it in place. To allow the bracket to be fitted with the body, taps for machine screws are placed along the edges of the bracket. Along the flanges of the bracket are openings for the radial ball bearings, and each flange is supported by gussets to ensure that it doesn’t easily break off.

Analysis

Since we want to control the output of the arm as much as possible, we’ll want to explore how the arms behave at different orientations. To do so, we’ll assume that no external forces (with the exception of gravity) are acting on the arm and that the motor we are using is a permanent magnet, direct current motor with a gearbox, specifically the KM-12FN20-100-06120 from the Shenzhen Kinmore Motor Co., Ltd. There are three views of the model that we’ll take into account: the arm, and the motor’s physical and electrical characteristics. Each view of the model will be presented, and then used to answer two questions: (1) are there any orientations of the arm that cannot be supported by the motor, and (2) are there any orientations that the motor cannot accelerate up to in order to achieve a steady angular velocity?

Arm


While the geometry of the arm is more complex than a standard geometric primitive, it will be modeled as a cuboid with square edge length of, and projected length

. The wall thickness,

, gives a total approximate volume of

. According to the material’s datasheet, the density of the printed material is

giving us an approximate mass of

.

Since we are talking about rotating the arm, the moment of inertia will come into play. We’ll use the standard formula for a cuboid, superposition principle to account for the hollow interior and parallel axis theorem to deal with the pivot about the elbow in order to come up with the actual moment of inertia for the model, .
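The three ingredients named above (cuboid formula, superposition and the parallel axis theorem) combine as in the following sketch. It assumes the cavity is concentric with the outer shell and that the wall thickness is uniform on every face, and it takes the rotation axis to be perpendicular to the arm's length; the function names and dimensions are illustrative, not the project's measurements.

```python
def cuboid_inertia(mass, edge, length):
    """Moment of inertia of a solid square cuboid about a central axis
    perpendicular to its length: m (edge^2 + length^2) / 12."""
    return mass * (edge ** 2 + length ** 2) / 12.0


def hollow_arm_inertia(edge, length, wall, density, pivot_offset):
    """Return (mass, moment of inertia about the pivot) for a hollow cuboid.

    pivot_offset is the distance from the center of mass to the pivot axis.
    """
    inner_edge = edge - 2.0 * wall
    inner_length = length - 2.0 * wall
    if inner_edge < 0 or inner_length < 0:
        raise ValueError("wall is too thick for the given dimensions")
    outer_mass = density * edge * edge * length
    inner_mass = density * inner_edge * inner_edge * inner_length
    mass = outer_mass - inner_mass
    # Superposition: subtract the missing interior from the solid exterior.
    central = (cuboid_inertia(outer_mass, edge, length)
               - cuboid_inertia(inner_mass, inner_edge, inner_length))
    # Parallel axis theorem: shift from the center of mass to the pivot.
    return mass, central + mass * pivot_offset ** 2


if __name__ == "__main__":
    # Hypothetical arm: 20 mm square, 100 mm long, 2 mm walls, 1000 kg/m^3,
    # pivoting about one end (50 mm from the center of mass).
    print(hollow_arm_inertia(0.02, 0.1, 0.002, 1000.0, 0.05))
```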

Physics

We have a series of torques being applied to the arm: first from the motor itself, $\tau_m$, the viscous friction, $\tau_f$, and finally from gravity, $\tau_g$.

The torque from the motor, $\tau_m$, is proportional to the current, $i$, that is applied with respect to the motor’s torque constant, $K_t$. The torque constant can be calculated by taking the quotient of the stall torque and stall current. For the motor that will be used, that value comes out to be

.

The viscous friction, $\tau_f$, is proportional to the speed at which the motor is rotating, $\omega$, and the motor’s viscous friction constant, $b$. The datasheet doesn’t include this value, so a near zero value was chosen based on properties of similar motors.

Torque due to gravity, $\tau_g$, is a function of the force due to gravity, $F_g$, and the orientation of the arm, $\theta$. Assuming that gravity and friction are acting in opposition to the motor, the net torque is the sum of these torques giving:

$$\tau = \tau_m - \tau_f - \tau_g$$

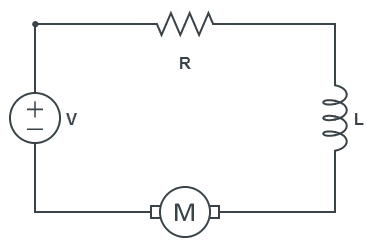
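A small sketch of the torque balance follows. It assumes the orientation is measured from the arm hanging straight down, so that gravity's torque is the arm's weight times the distance to its center of mass times the sine of the angle; that convention, the function names and the numbers are assumptions for illustration rather than values from the project.

```python
import math

STANDARD_GRAVITY = 9.80665  # m/s^2


def net_torque(current, omega, theta, torque_constant, friction_constant,
               arm_mass, com_distance):
    """Motor torque minus viscous friction and gravity torques (N*m)."""
    motor = torque_constant * current
    friction = friction_constant * omega
    gravity = arm_mass * STANDARD_GRAVITY * com_distance * math.sin(theta)
    return motor - friction - gravity


def holding_current(torque_constant, arm_mass, com_distance):
    """Current needed to hold the arm horizontal, the worst case for gravity."""
    return arm_mass * STANDARD_GRAVITY * com_distance / torque_constant


if __name__ == "__main__":
    # Hypothetical arm of 10 g with its center of mass 50 mm from the shaft.
    print(holding_current(torque_constant=0.1, arm_mass=0.01, com_distance=0.05))
```

Comparing the holding current against the motor's rated current is one way to approach the question of whether every orientation can be supported.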

Electronics

The ideal electronic model of the motor system is a series circuit consisting of the voltage, $V$, applied to the terminals of the motor, the motor’s internal resistance, $R$, due to the coils, the resulting inductance, $L$, and the back electromotive force (EMF), $e$, generated by the motor.


By Kirchhoff’s voltage law, the voltage applied to the terminals is equal to the sum of the potentials across each of the components giving:

$$V = R i + L \frac{di}{dt} + K_e \omega$$

The three constants in the equation, $R$, $L$ and $K_e$, are all unknown with the exception of the motor voltage constant, $K_e$, which is just the same as the motor torque constant $K_t$.

Since the resistance and inductance are not listed on the datasheet for the motor and could not be located online, we’d have to purchase the motor and then experimentally determine their values. To complete the analysis, we’ll make simplifying assumptions to work around this limitation.

Completed Model

Rewriting the physical and electronic governing equations in terms of the angular velocity, we end up with a system of inhomogeneous linear ordinary differential equations which can be solved using the technique of variation of parameters.

In doing so, we’d uncover nonlinear transient behavior and steady state fixed values that both current and angular velocity will approach in the limiting case.
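
To see the transient and the limiting values numerically rather than analytically, here is a minimal Python sketch that steps the coupled equations forward with the forward Euler method. It is a swapped-in numerical technique, not the variation of parameters solution, and every parameter value and name is a hypothetical placeholder since the real motor constants are not known. The gravity load is treated as a constant worst-case torque.

```python
def simulate_motor(V, R, L, K, J, b, load_torque, dt=1e-5, t_end=1.0):
    """Forward Euler integration, starting from rest, of

        L di/dt = V - R*i - K*w            (Kirchhoff's voltage law)
        J dw/dt = K*i - b*w - load_torque  (net torque)

    Returns (current, angular velocity) at t_end."""
    i = 0.0
    w = 0.0
    for _ in range(int(t_end / dt)):
        di = (V - R * i - K * w) / L
        dw = (K * i - b * w - load_torque) / J
        i += di * dt
        w += dw * dt
    return i, w


def steady_state(V, R, K, b, load_torque):
    """Fixed point of the two equations above (both derivatives zero)."""
    w = (K * V - R * load_torque) / (K * K + R * b)
    i = (V - K * w) / R
    return i, w


if __name__ == "__main__":
    # Hypothetical values chosen only to exercise the model.
    print(simulate_motor(V=6.0, R=10.0, L=0.01, K=0.5, J=1e-3, b=1e-5,
                         load_torque=0.05))
    print(steady_state(V=6.0, R=10.0, K=0.5, b=1e-5, load_torque=0.05))
```

With a time step much smaller than the electrical time constant, the simulated current and angular velocity settle onto the values returned by `steady_state`.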

Now that we have a complete model, we’ll make some simplifying assumptions in order to resolve questions (1) and (2). First, we’ll assume that the viscous friction constant is several orders of magnitude smaller than the other terms and can be set to zero. Second, we’ll assume that the motor has accelerated up to a fixed angular velocity. Finally, we’ll assume the motor will always be supporting the worst case load from the arm. Under those assumptions we arrive at:

To approach question (2), let’s think about the current side of the system. The maximum current takes place when the motor shaft is not rotating. Using an ohmmeter to determine the terminal resistance, the maximum current works out to be

which is higher than the rated current of

but less than the stall current of

. From a steady state point of view the motor will operate within the specified bounds.

However, when we go to reverse the motor, we’ll introduce a drop from the steady state speed down to zero and then ramp back up in the opposite direction. So in reality, we may observe current changes on the order of twice that maximum, which will push us outside the stated boundaries, so we’ll need to ensure that we operate at a voltage no higher than

. Provided we keep the voltage below

the rated voltage of

, sufficient current will be supplied to the motor and it will rotate up to a constant angular velocity and thus obtain any desired orientation.

Electronics

The final step in completing the design was to focus on how the electronics would illuminate the eye and chest cavity and drive the rotation of the arms. Since the electronics are the main focus of the project, I will cover how they fulfill these requirements in a bit more depth than the two prior sections.

Motor Control

The motor control subsystem is responsible for managing how frequently and how quickly the motor needs to rotate in each direction. The subsystem consists of a timing circuit determining how frequently the motor will rotate, a pulse width modulated circuit to determine how quickly, a Boolean logic circuit to form composite signals that will be fed to a motor driver, and an H-Bridge circuit serving as the motor driver used to drive the gearmotor.

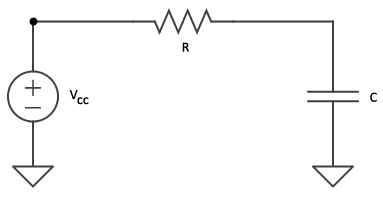

Series Resistor-Capacitor (RC) Circuits

To understand the basis for timing we’ll need to discuss series resistor-capacitor circuits briefly. Assume a series circuit consisting of a voltage source $V$ (Volts), a resistor with resistance $R$ (Ohms), and a capacitor with capacitance $C$ (Farads). There are two scenarios to consider: the first in which the capacitor is fully discharged and the second in which it is fully charged.

In the first, the capacitor will begin to charge, allowing some of the current to pass through, and then, once fully charged, it will have such impedance that no current will flow through. Kirchhoff’s voltage law says that the sum of the voltages across the resistor and capacitor is equal to the supply voltage.

Since there is one path for current to flow through the entire circuit, the amount of current flowing through the resistor is the same as the capacitor. Using Ohm’s law and the definition of capacitance and current we arrive at an initial value problem consisting of a linear ordinary differential equation that can be solved using the technique of integrating factors.

Solving the equation, we get a curve that rises at an exponentially decaying rate and, in the limiting case, approaches the supply voltage. For mathematical simplicity, let $\tau = RC$, the product of the resistance and capacitance, be the timing constant.

In the case of the discharging capacitor, Kirchhoff’s voltage law says the voltage of the capacitor is equal in magnitude to the voltage across the resistor.

Making the same assumptions as before, we arrive at another initial value problem consisting of a linear ordinary differential equation that can be solved using its characteristic equation.

As a result we get a curve that declines with exponential decay that will eventually approach zero in the limiting case. Using the timing constant again, we arrive at the following curve.

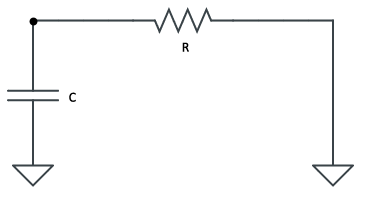
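
The two curves described above can be sketched in a few lines of Python. These are the standard closed-form solutions for a series RC circuit; the function and parameter names are my own, and the capacitor is assumed to start either fully discharged or charged to a known voltage.

```python
import math


def charging_voltage(t, v_supply, r, c):
    """Capacitor voltage t seconds after a discharged capacitor is
    connected to the supply through the resistor."""
    tau = r * c  # timing constant
    return v_supply * (1.0 - math.exp(-t / tau))


def discharging_voltage(t, v_initial, r, c):
    """Capacitor voltage t seconds after a capacitor charged to v_initial
    starts discharging through the resistor."""
    tau = r * c
    return v_initial * math.exp(-t / tau)
```

After one timing constant the charging capacitor has reached about 63% of the supply voltage and the discharging capacitor has fallen to about 37% of its starting voltage.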

Timing

In order to tell the motor how long it needs to be on in one direction, a 555 integrated circuit is used to generate a square waveform of a fixed frequency. This is done by chaining an RC circuit with the inputs of the integrated circuit in astable mode to generate a waveform with a duty cycle of roughly 50%. The duty cycle is a way of measuring what percent of the period the resulting square wave will spend in a high state. Here, we’ll have roughly equal parts high state and low state each period.

When the voltage applied to the trigger input falls to one third of the reference voltage, the output of the integrated circuit will be the same as the reference voltage. When the voltage applied to the threshold input rises to two thirds of the reference voltage, the output goes to ground. In this setup, the capacitor in the circuit will charge until the ceiling has been hit, then discharge until the floor has been hit, and so on.

| Characteristic | Value |
|---|---|
| Initial ramp up time (seconds) | |
| Discharge times (seconds) | |
| Subsequent charge times (seconds) | |
| Period (seconds) | |
| Frequency (Hertz) | |
| Duty cycle | |
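
For reference, the characteristics listed in the table can be computed with the textbook relations for a 555 in astable mode. The sketch below assumes the conventional topology (a resistor `r1` from the supply to the discharge pin, a resistor `r2` from the discharge pin to the capacitor `c`); those names and the example values are hypothetical and may not match the schematic used here.

```python
import math


def astable_555(r1, r2, c):
    """Timing characteristics of a conventional 555 astable circuit."""
    # First charge runs from 0 V up to two thirds of the supply.
    initial_ramp = math.log(3) * (r1 + r2) * c
    # Discharge runs from two thirds down to one third through r2 only.
    discharge = math.log(2) * r2 * c
    # Later charges run from one third up to two thirds through r1 + r2.
    charge = math.log(2) * (r1 + r2) * c
    period = charge + discharge
    return {
        "initial_ramp": initial_ramp,
        "discharge": discharge,
        "charge": charge,
        "period": period,
        "frequency": 1.0 / period,
        "duty_cycle": charge / period,
    }


if __name__ == "__main__":
    for name, value in astable_555(1e3, 100e3, 10e-6).items():
        print(name, value)
```

In this topology the duty cycle is always slightly above 50% and approaches it as `r1` becomes small relative to `r2`.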

To calculate the values for the resistors and capacitor, I wrote a program to explore the combinations of standard resistor and capacitor values, then narrowed it down to those combinations that would give nearly the desired duty cycle and a period of a few seconds. Values of

for the variables gave a period of roughly

seconds and a duty cycle of

providing the closest fit.
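
A search along those lines might look like the following Python sketch. It is not the original program: it assumes the conventional two-resistor 555 astable topology, restricts itself to the E12 series of preferred values, and ranks candidates with an error measure I made up for illustration.

```python
import math
from itertools import product

# E12 series of preferred values within one decade.
E12 = [1.0, 1.2, 1.5, 1.8, 2.2, 2.7, 3.3, 3.9, 4.7, 5.6, 6.8, 8.2]


def standard_values(decades):
    """All E12 values across the given powers of ten."""
    return [base * 10 ** d for d in decades for base in E12]


def search(target_period, target_duty=0.5, max_results=5):
    """Rank (r1, r2, c) combinations by how closely they hit the targets."""
    resistors = standard_values(range(2, 7))     # 100 ohm to 8.2 Mohm
    capacitors = standard_values(range(-9, -3))  # 1 nF to 820 uF
    candidates = []
    for r1, r2, c in product(resistors, resistors, capacitors):
        period = math.log(2) * (r1 + 2 * r2) * c
        duty = (r1 + r2) / (r1 + 2 * r2)
        # Hypothetical score: duty error plus relative period error.
        error = abs(duty - target_duty) + abs(period - target_period) / target_period
        candidates.append((error, r1, r2, c, period, duty))
    candidates.sort()
    return candidates[:max_results]


if __name__ == "__main__":
    for error, r1, r2, c, period, duty in search(target_period=3.0):
        print(f"R1={r1:g} R2={r2:g} C={c:g} period={period:.3f}s duty={duty:.4f}")
```

Because the search space is only a few hundred thousand combinations, brute force is more than fast enough and avoids any cleverness about which values to try.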

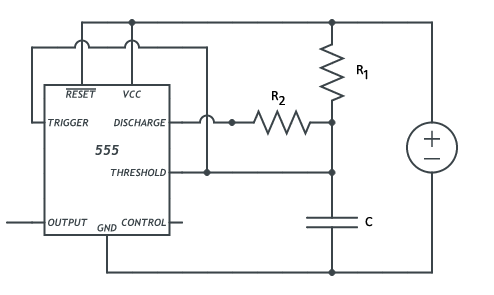

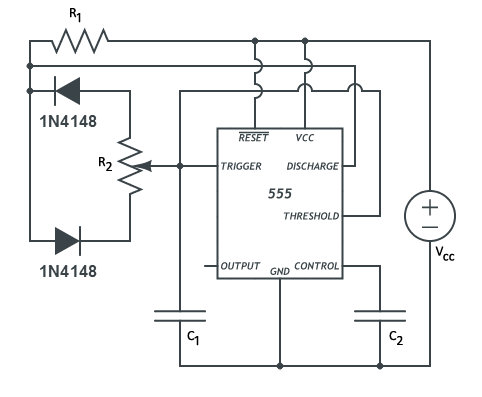

Pulse Width Modulation (PWM)

In order to control how fast the motor rotates, we’ll take advantage of the fact that the motor’s speed is linearly proportional to the voltage supplied to the motor and that the average output voltage of a PWM signal is linearly proportional to its duty cycle. To realize that plan, a 555 integrated circuit in astable mode is used to generate a high frequency signal whose duty cycle is controlled by the use of a potentiometer.

The fundamental operation of the 555 integrated circuit remains unchanged, however, the PWM circuit has a different topology than the timing circuit’s and as a result, has different timing characteristics. When the circuit is initially charging, current will go through , the bottom diode,

and

until the threshold voltage has been reached. Then, during the discharge phase, current will travel through the complementary side

of the potentiometer, the top diode to the discharge pin until the trigger voltage has been reached.

Using values of ,

and

we find the following:

| Characteristic | Time (seconds) |
|---|---|
| Initial ramp up time | |
| Discharge times | |
| Subsequent charge times | |

Thus, we’ll end up with a circuit running at with a duty cycle range of

giving a very broad range of voltage values that can be chosen at run time.

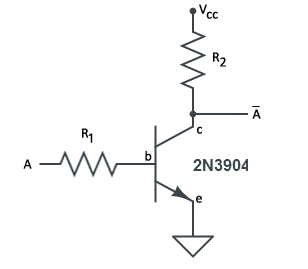

NOT-Gates and Resistor-Transistor Logic (RTL)

To begin talking about the logic used to combine the PWM and timing signals, we’ll need to perform negation of a signal. The simplest way is to use an RTL-based NOT-gate as depicted above. Assuming the logic supply voltage is logical true and zero volts is logical false, when the input is true we’ll switch the transistor on so that the output is false, and vice versa: when the input is false we’ll switch the transistor off so that the output is true.

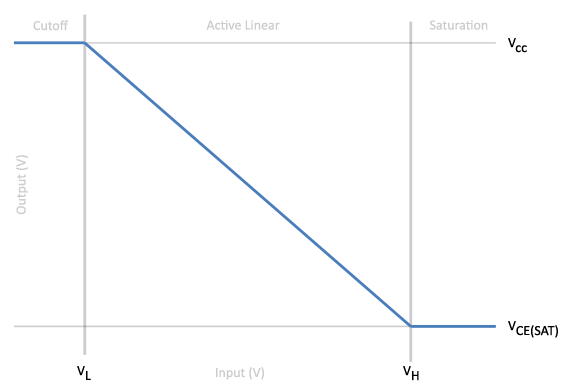

To determine the values of the resistors, we’ll need to look at the low-frequency, large signal model of the transistor which consists of three states: cut-off, active-linear and saturation. For the logic gate, we’ll want to minimize the active-linear region and focus on flip-flopping between the cut-off and saturation regions based on the value of the input.

For the output to be logically true, the input voltage must be less than or equal to the saturation voltage between the base and emitter. This condition will put the switch into a cut-off state.

If, however, the input voltage is greater than the base-emitter saturation voltage, then the transistor will be in saturation mode and from Kirchhoff’s current law we’ll see that the currents for the base and collector are:

Based on the condition that the ratio of the collector current to the base current be less than the gain, we’ll find that

Given the latest piece of information, it’s possible to decide on a value of one resistor and pick the value of the other such that the collector resistor is several times larger than the base resistor and greater than to ensure that the max current,

, into the transistor is not exceeded. Based on these criteria, I went with

.
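
To make the cut-off and saturation conditions concrete, here is a minimal Python sketch of the check. It uses a simplified transistor model with assumed saturation voltages of 0.7 V (base-emitter) and 0.2 V (collector-emitter); those defaults, the function names, and the resistor values in the usage example are all hypothetical and are not the values chosen for the product.

```python
def not_gate_currents(v_in, v_cc, r_base, r_collector,
                      v_be_sat=0.7, v_ce_sat=0.2):
    """Base and collector currents of the RTL NOT-gate, assuming the
    transistor is either cut off or fully saturated."""
    if v_in <= v_be_sat:
        return 0.0, 0.0  # cut-off: no base current, output pulled high
    i_base = (v_in - v_be_sat) / r_base
    i_collector = (v_cc - v_ce_sat) / r_collector
    return i_base, i_collector


def is_saturated(v_in, v_cc, r_base, r_collector, gain,
                 v_be_sat=0.7, v_ce_sat=0.2):
    """True when the collector-to-base current ratio is below the gain."""
    i_base, i_collector = not_gate_currents(
        v_in, v_cc, r_base, r_collector, v_be_sat, v_ce_sat)
    return i_base > 0.0 and i_collector / i_base < gain


if __name__ == "__main__":
    # Hypothetical 5 V logic with a 1k base and 4.7k collector resistor.
    print(is_saturated(5.0, 5.0, 1e3, 4.7e3, gain=100))  # input high
    print(is_saturated(0.0, 5.0, 1e3, 4.7e3, gain=100))  # input low
```

The same collector current figure can be compared against the transistor's maximum rated current before settling on resistor values.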

Logic

Given the timing signal and the PWM signal, we’ll produce a composite signal that will retain the period of the timing signal while controlling the observed amplitude from the PWM signal. This will give a signal that can be used to control one of the terminals of the gearmotor. Since the gearmotor terminals are designed to have one be in a ground state while the other in a high state, there will be a second composite signal that is simply the composite of the negation of the timing signal with the PWM signal.
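
In Boolean terms the two composite signals are simply the timing signal AND the PWM signal, and the negated timing signal AND the PWM signal. A small Python sketch (the function name is my own) makes the truth table explicit:

```python
def motor_terminal_signals(timing, pwm):
    """Return the logic levels for the two gearmotor terminals."""
    first = timing and pwm          # active during the timing signal's high half
    second = (not timing) and pwm   # active during the timing signal's low half
    return first, second


if __name__ == "__main__":
    for timing in (False, True):
        for pwm in (False, True):
            print(timing, pwm, motor_terminal_signals(timing, pwm))
```

Whatever the inputs, the two outputs are never high at the same time, which is what keeps one terminal at ground while the other is driven.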

Since there were multiple signals to conjoin, I opted to use the 7408 quad two-input AND-gate integrated circuit based on transistor-transistor logic (TTL), a more efficient way of approaching the problem. I could have just as well used RTL to perform the conjunction, but the protoboard real estate required exceeded what I’d allocated, and using the integrated circuit simplified the design. There were NOT-gate integrated circuits that I could have used as well (e.g., 7404), but I decided to use the RTL-based solution since it gave me an opportunity to learn the basics of transistors, which would be required to understand the motor driver.

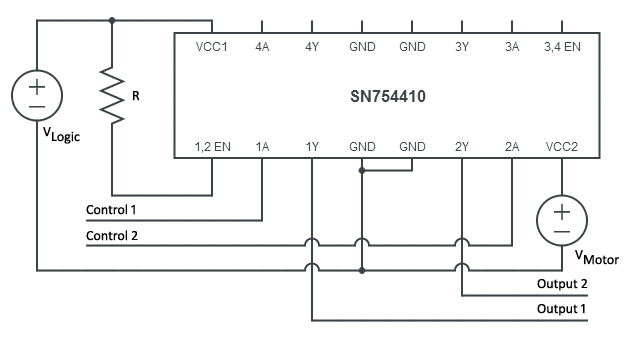

Driving the Motor

With the motor controller specified, it’s time to look at the motor driver. The gearmotor will draw a fair amount of current and since most of the logic circuitry is designed for low current consumption, it’s not feasible to drive the motor using the controller. Instead, an H-Bridge will be used to supply enough current while isolating the controlling logic.

An H-Bridge consists of several transistor switches and flyback diodes for controlling the flow of current. The motor is designed to rotate clockwise or counterclockwise depending on the polarity of the voltage applied to the terminals. Since we’ve got two signals that designate which terminal is ground and which is high, it’s a matter of feeding the signals to the inputs of the bridge that correspond to rotating in the desired direction.

I’d put together two designs, one using bipolar junction transistors (BJT) and one using the SN754410 quadruple half-H-bridge integrated circuit. The first required only a handful of components and, wanting to better understand transistors, I opted to go this route for prototyping. In creating the production protoboard I decided to go with the SN754410 for reasons I’ll cover in that section. As far as the design is concerned, they are functionally identical in exposing a higher voltage source to a motor while insulating the lower voltage controller circuitry.

Completed Motor Controller

With a full list of schematics for the motor controller, the next step is to design the circuit that will be soldered to the controller’s protoboard. The motor controller will sit in one of the legs of the product and reside on a protoboard. With the limited real estate, it is necessary to utilize each position on the protoboard. To do this, the PWM and timing circuits occupy the right-hand side of the board, while the logic circuitry occupies the left side. Two block terminals are used to route input and output signals for the logic voltage and ground, and the two output signals. The motor driver itself will reside along with the circuitry for the LEDs which will be covered in the next section.

LED Control

Overall, the product consists of a single red LED for the eye, three blue LEDs for the chest and three green indicator LEDs used for debugging the circuit. The eye LED is always on; a constant supply is provided whenever the product is on. The three chest LEDs are driven by a triangle oscillator and each of the indicator lights by their respective signals. One complication in designing the indicators is that the ideal approach of driving them with

and switching them on and off with a transistor switch controlled by their respective signals consumes a lot of protoboard real estate. As a compromise, the indicators are driven directly by their signals.

Since the triangle oscillator represents the bulk of the circuit, this section will be dedicated to its analysis, operation and characteristics.

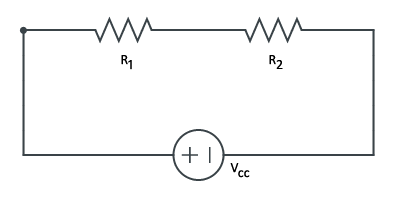

Voltage Divider

Since we’ll be using a single supply operational amplifier design to control the chest LEDs, we’ll need to create a reference voltage between the supply and ground. This will be achieved using a voltage divider. By Kirchhoff’s voltage law, the supply voltage $V$ is the sum of the voltage drops across the two resistors, and because the same current flows through both, each drop is proportional to its resistance, leaving $V_{ref} = V \frac{R_2}{R_1 + R_2}$ across the second resistor to ground. Since we want a voltage half that of the supply, we choose $R_1 = R_2$ so that $V_{ref} = \frac{V}{2}$.

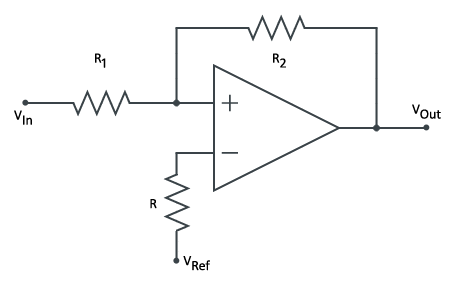

Non-inverting Schmitt Trigger

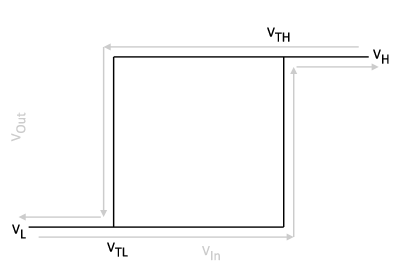

In the timing section we focused on creating a square wave output using a 555 timer. While this is one way to go about it, another is to use an operational amplifier based non-inverting Schmitt trigger. The idea is that for a given input voltage, the output will be either the operational amplifier’s low rail or high rail voltage, depending on whether the input voltage is decreasing past the transition-to-lower threshold or increasing past the transition-to-higher threshold.

In this configuration, the operational amplifier is used in a positive feedback loop, in which case there are two defining characteristics of its ideal behavior: (1) the output voltage of the amplifier is linearly proportional to the difference between the two terminals, scaled by the amplifier’s gain, and (2) there is no current flowing into either of the terminals. Based on these assumptions, we’ll apply Kirchhoff’s current law to the non-inverting terminal of the amplifier to determine the appropriate values for

and

.

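There are two cases to explore. In the first, we’ll assume the output is at the high rail. For that to be true, the non-inverting terminal must be at a higher voltage than the inverting terminal due to characteristic (1). In the second, we’ll assume that the output is at the low rail, which means the non-inverting terminal must be at a lower voltage than the inverting terminal for the same reason. Based on these two different sets of assumptions we find the following relationships.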

On its own, the circuit won’t generate a square wave, but as the input varies with time, the circuit will flip-flop between ground and the supply voltage. The timing is determined in part by the circuit’s noise immunity, i.e., the difference between the thresholds.
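
The hysteresis can be sketched in Python under an assumed topology: an input resistor `r_in` from the input to the non-inverting terminal, a feedback resistor `r_fb` from the output to the non-inverting terminal, and the inverting terminal held at `v_ref`. Those names and the example values are hypothetical, and the amplifier is idealized as swinging fully between `v_low` and `v_high`.

```python
def schmitt_thresholds(r_in, r_fb, v_ref, v_low, v_high):
    """Return (falling, rising) input thresholds.

    The output switches when the non-inverting terminal crosses v_ref:
        (v_in * r_fb + v_out * r_in) / (r_in + r_fb) = v_ref
    """
    ratio = r_in / r_fb
    rising = v_ref * (1 + ratio) - ratio * v_low    # output low -> high
    falling = v_ref * (1 + ratio) - ratio * v_high  # output high -> low
    return falling, rising


def schmitt_output(v_in, previous_output, r_in, r_fb, v_ref, v_low, v_high):
    """Next output given the input and the previous output (memory)."""
    falling, rising = schmitt_thresholds(r_in, r_fb, v_ref, v_low, v_high)
    if v_in >= rising:
        return v_high
    if v_in <= falling:
        return v_low
    return previous_output  # inside the hysteresis band: hold state


if __name__ == "__main__":
    print(schmitt_thresholds(10e3, 20e3, 2.5, 0.0, 5.0))
```

The gap between the two thresholds is `(r_in / r_fb) * (v_high - v_low)`, so the resistor ratio directly sets the noise immunity.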

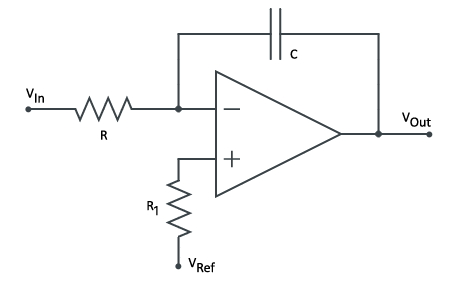

Inverting Integrator

To complete the triangle oscillator, we’ll need to review the inverting integrator based on an operational amplifier. The idea behind the circuit is that as the input voltage varies with time, the output voltage will be the negated accumulation of that input.

The inverting integrator is an example of a negative feedback loop. The characteristics that applied to analyzing a positive feedback loop also apply to analyzing a negative feedback loop with the additional characteristic that (3) the voltage of the two terminals is identical.

Par for the course, we’ll start by applying Kirchhoff’s current law to the inverting terminal of the operational amplifier.

Let’s assume that the input voltage can only take one of two values, a low one and a high one, and that the reference voltage is half the maximum of these two voltages. Based on these assumptions the observed output voltage is then:

When the input is at the high value the output will decrease linearly, and when it is at the low value the output will increase linearly. If we uniformly toggle back and forth between these two values, then the output voltage will produce a triangle wave.

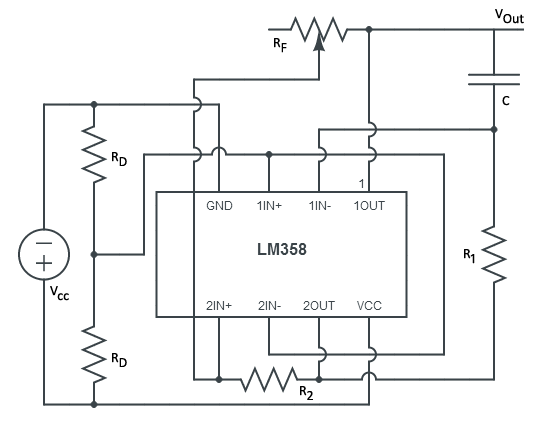

Triangle Oscillator

Now that the non-inverting Schmitt trigger and inverting integrator have been covered, it’s time to loop the two together so that the trigger’s output feeds into the integrator’s input and the integrator’s output feeds into the trigger’s input. Assuming that the trigger’s output is in the high state when the circuit is started, the trigger’s input, and hence the integrator’s output, has to be greater than the trigger’s upper threshold. (The complementary set of events would take place if we had instead assumed that the trigger was originally outputting a low state.)

As the trigger’s output continues to be the high state, the inverting integrator’s output will linearly decrease over time. This output will continue to be fed back into the trigger until the trigger’s lower threshold is surpassed. Once this happens, the trigger’s output will be the low state. As the trigger’s output continues to be in the low state, the integrator’s output will linearly increase over time. This output will continue to be fed back into the trigger until the trigger’s upper threshold is surpassed. The trigger’s output will then be the high state and the whole cycle will repeat itself.
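
The cycle described above is easy to reproduce with a small time-stepping simulation. The Python sketch below idealizes the amplifier as swinging between ground and the supply, sets the reference to half the supply, and assumes a trigger built from an input resistor `r_in` and feedback resistor `r_fb` feeding an integrator made of `r_int` and `c_int`. All names and values are hypothetical, and `r_in` must be smaller than `r_fb` for the thresholds to sit inside the rails.

```python
def simulate_triangle(r_in, r_fb, r_int, c_int, v_supply, dt=1e-5, t_end=1.0):
    """Return the times at which the trigger output switches low -> high."""
    v_ref = v_supply / 2.0
    ratio = r_in / r_fb
    upper = v_ref * (1 + ratio)                     # low -> high threshold
    lower = v_ref * (1 + ratio) - ratio * v_supply  # high -> low threshold
    trigger_high = True
    v_out = upper  # integrator output starts at the upper threshold
    rising_edges = []
    for step in range(int(t_end / dt)):
        v_trigger = v_supply if trigger_high else 0.0
        # Inverting integrator: output ramps opposite to (input - reference).
        v_out -= (v_trigger - v_ref) / (r_int * c_int) * dt
        if trigger_high and v_out <= lower:
            trigger_high = False
        elif not trigger_high and v_out >= upper:
            trigger_high = True
            rising_edges.append((step + 1) * dt)
    return rising_edges


def measured_frequency(rising_edges):
    """Average frequency from the spacing of consecutive rising edges."""
    return (len(rising_edges) - 1) / (rising_edges[-1] - rising_edges[0])


def analytic_frequency(r_in, r_fb, r_int, c_int):
    """Closed form for the idealized loop above: two ramps per period, each
    crossing a band of v_supply * r_in / r_fb at v_supply / (2 * r_int * c_int)."""
    return r_fb / (4.0 * r_in * r_int * c_int)


if __name__ == "__main__":
    edges = simulate_triangle(10e3, 20e3, 100e3, 1e-6, 5.0)
    print(measured_frequency(edges), analytic_frequency(10e3, 20e3, 100e3, 1e-6))
```

Replacing `r_int` with a potentiometer value sweeps the frequency without moving the thresholds, since only the ramp rate changes.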

The completed circuit consists of a voltage divider, trigger and integrator around an LM358 dual operational amplifier. To provide a wide range of frequencies, I opted to use a potentiometer to control the frequency of the output rather than relying on a single fixed value resistor. This was done since I didn’t know what the ideal frequency would be, and it bought me a range of solutions and not just one.

Based on the equations derived, the frequency and maximum outputs of the system are:

Using values of ,

and

, buys a frequency range of

. For the purpose of flashing a series of LEDs, this is sufficient. As far as the extrema of the output voltage are concerned, we are looking at:

The design will use blue LEDs which come with a voltage drop of about meaning that for a value of

we should expect fairly triangular output in the lights, but as that value increases, and the resulting output window narrows, we’ll see only blips of light fade in, then out followed by a period of darkness before cycling.

Completed LED Controller

All of the LEDs in the product sit in the chest cavity of the body and reside on a protoboard. While there are numerous positions, most of the components on the protoboard were required to be in specific locations and took up a fair amount of space. The triangle oscillator took up the left-hand side of the circuit with the motor driver taking up the right-hand side. The top of the circuit consisted of the chest and eye LEDs and the bottom of the circuit had input and output block terminals for taking in logic and driving voltage as well as ground, and the two motor control signals. The output block terminals are then connected to the motor.

Power Control

In designing the electronics for managing the power in the product, I chose to provide two separate voltage sources: two AAA batteries giving a combined 3V and a single

battery. The former is used to power a boost converter up to 5V for the purpose of powering all of the logic circuits in the product; the latter is used to power a

linear regulator for the purpose of powering the LEDs and motor. Both voltage sources are controlled by a single latching push button.

Of the subcomponents in the circuit, the boost converter is the most interesting; this section will be primarily devoted to discussing its analysis, operation and characteristics.

Series-Parallel Resistor-Inductor-Capacitor (RLC) Circuits

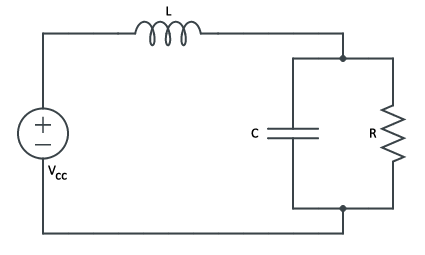

To begin talking about the boost converter, it’s necessary to talk about the series-parallel RLC circuit which differs from the standard series and parallel circuit topologies in that the inductor runs in series to a resistor and capacitor in parallel.

While the topologies may differ, the characteristics used to simplify the analysis of RLC circuits remain the same. When convenient, the following substitutions will be made:

| Characteristic | Equation |
|---|---|
| Natural frequency | |
| Damping attenuation | |
| Damping factor | |
| Damped natural frequency | |

Based on Kirchhoff’s voltage law the voltage source is the sum of the voltage across the inductor and that of the RC subcircuit. Kirchhoff’s current law says that the amount of current flowing through the inductor is the same as the aggregate current flowing through the capacitor and resistor. Based on these assumptions, we arrive at the following second order linear nonhomogeneous ordinary differential equation:

The general solution for a differential equation of this form is to take the superposition of the homogeneous solution with the particular (nonhomogeneous) solution. For the former we’ll use the characteristic equation of the homogeneous equation and then the method of undetermined coefficients for the latter.

Since we have a second order equation, we can run into repeated real roots (critically damped, damping factor equal to one), unique real roots (overdamped, damping factor greater than one) and unique complex roots (underdamped, damping factor less than one) when solving the characteristic equation. Since the end goal is to explore the boost converter, only the underdamped case will be reviewed. For the particular solution, we have a constant forcing function, so the method of undetermined coefficients says we’ll end up with a constant valued particular solution. Taking these results together we arrive at:

In order to determine the coefficients’ values, we’ll need to determine the initial conditions of the system. Initially, the inductor will resist any change in current, so since there is no current, the initial current is zero. If no current is flowing, then the inductor acts briefly like an open switch and the output voltage is then zero. Looking at the limiting behavior of the circuit, the inductor will turn into a plain connection and the capacitor will become fully charged and disappear from the circuit; this means that the steady state voltage will become the source voltage. As a result we end up with the following solution:

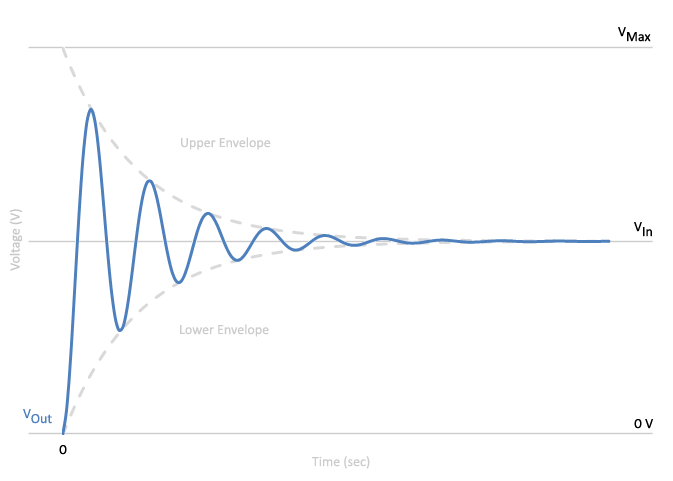

Looking at the voltage over time we see that the output voltage is greater than the input voltage at the beginning of the circuit’s uptime and as time elapses, the output voltage converges to the input voltage. The peak output voltage will be observed very early on:

We’ll leverage this behavior to get the boost in voltage from the boost converter.
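
The overshoot can be checked numerically with a short Python sketch of the underdamped step response. It assumes the governing equation $LC\,v'' + \frac{L}{R}\,v' + v = V$ for the output voltage, which follows from the Kirchhoff statements above, together with zero initial voltage and zero initial current. The symbol names and component values are my own placeholders.

```python
import math


def rlc_parameters(r, l, c):
    """Natural frequency, attenuation, damping factor and damped frequency
    for an inductor in series with a parallel resistor and capacitor."""
    omega_0 = 1.0 / math.sqrt(l * c)
    alpha = 1.0 / (2.0 * r * c)
    zeta = alpha / omega_0
    if zeta >= 1.0:
        raise ValueError("only the underdamped case is handled")
    omega_d = math.sqrt(omega_0 ** 2 - alpha ** 2)
    return omega_0, alpha, zeta, omega_d


def output_voltage(t, v_source, r, l, c):
    """Underdamped step response with v(0) = 0 and v'(0) = 0."""
    _, alpha, _, omega_d = rlc_parameters(r, l, c)
    oscillation = math.cos(omega_d * t) + (alpha / omega_d) * math.sin(omega_d * t)
    return v_source * (1.0 - math.exp(-alpha * t) * oscillation)


def peak_output(v_source, r, l, c):
    """Time and value of the first (largest) peak of the response."""
    _, alpha, _, omega_d = rlc_parameters(r, l, c)
    t_peak = math.pi / omega_d
    return t_peak, v_source * (1.0 + math.exp(-alpha * t_peak))


if __name__ == "__main__":
    print(peak_output(3.0, 1e3, 1e-3, 1e-6))
```

With light damping the peak approaches twice the source voltage, which is the headroom the boost converter exploits.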

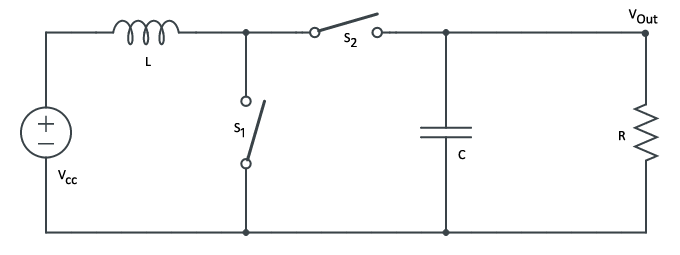

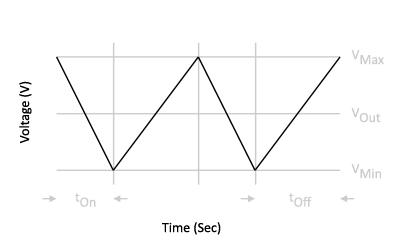

DC-DC Boost Converter

The boost converter is a way of converting an input voltage to a higher output voltage by switching between two different states that charge and discharge the inductor’s magnetic field. In the charging state, the first switch is closed and the second switch is open for a period of time, resulting in two isolated circuits: a circuit consisting of a single voltage supply and inductor, and an RC circuit. The RC circuit will discharge to produce a decreasing output voltage. In the discharge state, the first switch is open and the second switch is closed for a period of time, resulting in a series-parallel RLC circuit that produces an increasing output voltage. The output voltage is therefore the average of the voltage over switching between the two states.

For a thorough analysis of the boost converter, you should refer to Wens and Steyaert, to whom the following input-output voltage relationship is attributed.

As the duty cycle increases from zero to one, the output voltage will start off as the input voltage and increase towards infinity. Realistically though, this is not obtainable and a 5x multiple is a more reasonable upper bound.
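
The limiting behavior described above matches the ideal continuous-conduction relation for a boost converter, in which the output is the input divided by one minus the duty cycle. Assuming that is the relationship in question, a minimal Python sketch (function names are my own) is:

```python
def boost_output(v_in, duty):
    """Ideal boost converter output for a duty cycle in [0, 1)."""
    if not 0.0 <= duty < 1.0:
        raise ValueError("duty cycle must be in [0, 1)")
    return v_in / (1.0 - duty)


def required_duty(v_in, v_out):
    """Duty cycle an ideal converter needs to reach v_out from v_in."""
    if v_out < v_in:
        raise ValueError("a boost converter cannot step the voltage down")
    return 1.0 - v_in / v_out


if __name__ == "__main__":
    print(required_duty(3.0, 5.0))  # duty cycle for a 3V to 5V boost
    print(boost_output(3.0, 0.8))   # the 5x multiple mentioned above
```

A real converter loses some of this gain to resistance in the inductor and switches, which is why very high multiples are not reachable in practice.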

3V to 5V Boost Converter

To realize this boost converter design, I went with the Maxim MAX630 to serve as the first switch in the system and a 1N4148 diode to serve as the second switch. (The diode functions as a switch by only allowing current to move in one direction.) According to the Maxim datasheet, the MAX630 works by monitoring the voltage on VFB and when it is too low, the MAX630 oscillates its internal N-channel MOSFET at a high frequency open and shut on LX to put the system into the charging state. Once VFB is above the desired voltage, LX is left open to put the system into the discharging state. This cycle repeats until the system is powered off.

Due to the oscillatory nature of the charging phase used by the MAX630, the analysis that was performed for the series-parallel RLC circuit is cumbersome to use here to determine the appropriate values for the passive components. Fortunately, the MAX630’s datasheet had a schematic for a 3V to 5V boost converter utilizing an inductance of

and capacitance

. The voltage dividers on the left-hand side of the schematic are used for low battery detection and the voltage divider on the right-hand side is used in reference to the voltage comparison done by the VFB input. Based on the datasheet these values come out to be

,

,

and

.

Completed Power Controller

The power controller will sit in one of the legs of the body and reside on a protoboard. The voltage regulator sits on the left-hand side of the circuit while the boost converter occupies the right-hand side. In between are the block terminals for taking in the on-off switch, grounds,

and

supplies. Above that block terminal is the output terminal providing

,

and ground.

Building Phase

Sourcing

One thing that surprised me perhaps more than anything about this project was how difficult it was to find the right parts having the desired characteristics. Overall, I had orders with about a half dozen vendors from here in the United States and abroad.

3D printing services were carried out by Shapeways, Inc. out of New York, New York. After receiving my package, I noticed a missing piece. After I contacted their customer service, they were able to resolve the matter and ship me a replacement part. Evidently, since the missing piece was inside the main shell, the operator didn’t see it on the reference, so it didn’t get shipped. The hiccup delayed me by about two weeks, but nonetheless, they made right by the mistake.

The acrylic plates used on the front of the product were sourced from TAP Plastics of Mountain View, California. Painting supplies, adhesives and additional finishing tools were acquired from McGuckin’s Hardware store of Boulder, Colorado.

Machine elements were received from McMaster-Carr of Elmhurst, Illinois. They had quite possibly the fastest order placement to shipping time I’ve ever seen. I’d love to see the system that powers that operation. Additional elements were acquired from various Amazon merchants and local big-brand hardware stores. The gearmotor was purchased from Sparkfun Electronics of Boulder, Colorado.

Electronic components were primarily received from Mouser Electronics of Mansfield, Texas. Their selection and speed of shipping were superb. Additional components were purchased from electronics store J. B. Saunder’s of Boulder, Colorado when I needed something quickly and from various Amazon merchants.

In terms of cost of these parts, buying in bulk and in single orders saves on per item cost and on shipping. Buying just the components needed would have ended up being more expensive than buying them in bulk. In effect, multiple versions of the product could be produced cheaper than just producing one.

Prototyping

Industrial Design

To develop a sense of how the product would come together, it was helpful to construct a cardboard based version of the final product based off the measurements I’d put together during the design phase. This enabled me to understand proportions, and the working space for the electronic and mechanical components. It also helped remind me that I was working towards a well-defined end goal.

Mechanical

As in the previous section, I also put together some cardboard based prototypes of the drive system. This consisted of a couple cardboard gears, a mocked up motor and a couple straws. Not being very savvy when it comes to all things mechanical, it was helpful to see the parts in action before committing to anything.

Once I had purchased the machine elements I wanted to see how the shafts and everything would mesh together so I decided to make a wooden version of the motor carriage. My thinking here was that if it was easy enough to make I could skip having that component 3D printed to save on cost. After a few trips to the lumber store, some careful drilling and wood glue, the motor carriage was put together and I was able to verify that the axle and motor assemblies would mesh together and be capable of reliably holding everything in place.

From this exercise I concluded that it wasn’t worth the extra effort to really spend a lot of time on the wooden version. I simply didn’t have the right tools or workspace to get the kind of precision that would be needed to make everything run smoothly so I proceeded to think about what the 3D printed version would look like.

Electronics

Working with the electronics was a bit of a steep learning curve to traverse, but as time went on, it became easier to translate circuit diagrams to the breadboard. Coming from a software background, I put together the circuits in as modular a way as possible to facilitate testing of sub circuits in isolation. This made it significantly easier than attempting to debug issues with the circuit as a whole.

Production

Industrial Design

3D printing of the product was done by laser sintering. This is a process where a thin layer of ESA Polyamide 2200 is laid down and the cross section of the product is heated to bond the material together; a new layer is then added and the process repeated until the volume is rendered.

After a month of modeling the product in OpenSCAD, the resulting STL file was submitted to be printed. After ten days, the product was fabricated and delivered. As an observation, the end result had a look and feel similar to that of a sugar cube. Overall, the detail on the product came out crisp and only those openings whose diameter was less than 2mm ended up coming out slightly deformed on one side. The rest of the details came out well. The back plate logo and copyright text as well as the interior WEEE symbol, RIC symbol and copyright text came out crisp and legible despite having fine details.

From here, it was time to undertake the process of giving the exterior of the model an aluminum looking finish. This was achieved by applying an aerosol primer for plastics and several coats of a metallic paint that formed a firm film of enamel that added some extra strength to the body. In between coats, the body was sanded down with finer and finer grit to remove any imperfections or inconsistencies introduced during the spray paint process. I decided to keep the striations from the printing process since they gave the finished product a more believable brushed metal look. I didn’t paint the interior since I didn’t want any minuscule metallic flakes from the paint to potentially interact with the electronics.

The acrylic for the eye and chest plate was cut by hand and seated into the body with an epoxy for binding acrylic to plastics. To give the chest plate the same look as the original illustration, several layers of electrical tape were placed on the back of the chest plate and the openings were cut out with an X-Acto knife. Each of the mechanical and electronic components that were part of the body was then secured with additional adhesives. To make sure the mechanical components lined up properly I threaded an aluminum shaft through the ball bearings and then glued each bearing to the body or bracket. Once the adhesive had dried, it was easy to slowly pull the shaft back out.

Mechanics

The final production work on the mechanics dealt with securing the pinion gear to the motor shaft, the motor to the chassis, the bevel gears and machine elements to the shafts, and finally the arms. One of the major complications in putting the mechanical system together was that many of the parts came from different vendors and used a mixture of imperial and metric units. As a result, realizing the original design took a more roundabout route than I would have liked. C’est la vie.

Starting with the gears themselves, the bevel gear had a racetrack-shaped interior diameter of about 4mm. The closest aluminum dowel that would fit was 5/64″ in diameter. Since that is a nonstandard imperial diameter, I went with a 3/16″ diameter rod, which was more prevalent among the hardware vendors. To compromise, the bevel gears were attached and centered to the 5/64″ dowel with adhesive and left to set. Once set, they were then placed and centered inside the 3/16″ rod and fixed with adhesive.
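To make the unit mismatch concrete, here is a minimal sketch that converts the fractional-inch stock sizes mentioned above to millimetres and compares them with the roughly 4mm bore. The helper name `inches_to_mm` is only illustrative, and the 4mm figure is the approximate one quoted above rather than a measured value.

```python
from fractions import Fraction

MM_PER_INCH = 25.4  # exact by definition of the international inch


def inches_to_mm(inches: Fraction) -> float:
    """Convert a fractional-inch size to millimetres."""
    return float(inches) * MM_PER_INCH


# Approximate bore of the bevel gear quoted in the text (assumption: ~4 mm).
BORE_MM = 4.0

# Fractional-inch stock sizes mentioned in the text.
STOCK_SIZES = {
    '5/64"': Fraction(5, 64),
    '3/16"': Fraction(3, 16),
}

if __name__ == "__main__":
    for label, size in STOCK_SIZES.items():
        mm = inches_to_mm(size)
        print(f"{label} = {mm:.3f} mm (difference from bore: {mm - BORE_MM:+.3f} mm)")
```

Running a check like this before ordering parts shows how far each imperial size sits from the metric dimension it has to mate with.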

The pinion gear and the motor’s shaft both had a 3mm radius, but the shaft was D-shaped since it was intended to mate with an RC car wheel. (Coincidentally, the gears were part of a larger differential gear set intended for an RC car.) Despite the mismatch in shape, the pinion and motor shaft shared the same diameter, so it was easy to secure the pinion on the shaft with adhesive.

You’ll note that fewer shaft collars were used as a consequence of this complication, which was primarily rooted in my choice of gears. I didn’t have many options when it came to gears, and I went with the best of my worst options: it was cheaper to purchase the gears as a set than to buy them individually for far more than I was willing to pay. Nonetheless, everything came together within reason.

Electronics

Transcribing each portion of the prototype from the breadboard over to the protoboard was a challenge that lasted for a few months. I’d spent a fair amount of time on it, was becoming fatigued by the experience, and had hit a low point in terms of morale and motivation. As a result, I made mistakes that I shouldn’t have been making, and I recognized I needed to change what I was doing if I was going to finish the project. After taking a two-week break, I thought I’d gotten things under control by double and triple checking my designs and taking my time to make sure I wasn’t putting parts in backward or offset, or accidentally connecting parts that shouldn’t be connected. Then I ran into a major problem.

I could not identify a short in my original BJT based H-bridge design. After reviewing the protoboard layout, breadboard layout, the design, my reference material and datasheets, I was stumped. This went on for weeks, and I realized that this was just something more involved to get right than I had led myself to believe and that I needed to move on. As a result, I compromised on the design and decided to use a quad half H-bridge integrated circuit in place of a BJT based H-bridge design.

I also concluded that I needed to change my approach to the power management for the project. I felt that drawing current from a single voltage supply for both the logic and drive wasn’t the right thing to do and that I needed to split these concerns into their own dedicated voltage supplies. Not wanting to just throw another battery into the mix, I decided I would go with a boost converter to step up a lower-voltage supply for the logic components while leaving the existing supply to be regulated down for the motor and LEDs.
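As a rough guide to what the boost converter has to do, the ideal (lossless, continuous-conduction) relation between input voltage, output voltage and switch duty cycle $D$ is sketched below. This is the textbook idealization, not a characterization of the specific regulator used, and the example voltages are hypothetical rather than the values from this build.

```latex
V_{out} = \frac{V_{in}}{1 - D}, \qquad 0 \le D < 1
\quad\Longrightarrow\quad
D = 1 - \frac{V_{in}}{V_{out}}

% Hypothetical example (not the values used in this build):
% V_{in} = 3\,\mathrm{V},\; V_{out} = 5\,\mathrm{V} \;\Rightarrow\; D = 1 - 3/5 = 0.4
```

A real converter needs a somewhat higher duty cycle than this to make up for switching and conduction losses.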

In retrospect it wasn’t the right decision since it meant adding complexity to the end result. It also meant desoldering a lot of work and spending additional time and money on new parts and a new design. But at this point, I had committed to the change and proceeded. After receiving the new parts I went through another round of testing on the new designs on the breadboard and concluded that the changes would work and proceeded to solder the changes to the protoboards.

Ironically, the boost converter and quad half H-bridge integrated circuit were the easiest things to map to the protoboard, and any doubt that I could get the final electronics to work was gone. Despite the big change and the frustration, I felt that I had turned things around and was back on track.

Having finished the protoboards I fastened them to the back plate and made sure there was enough room in the body for everything to fit. I’d given myself some room between the machinery and the electronics, but not enough for the wires to lie in between. With some electrical tape I was able to bind the wires tightly and secure everything in its place and was finally ready to test drive the end result.

Evaluation Phase

Testing

Having put so much effort into the body of the product, I didn’t really have the heart to subject the 3D printed bodies to any serious stress tests. In handling the material and developing a feel for it, I didn’t develop an impression that it was overly fragile; it withstood several rounds of aggressive sanding, boring and drilling without fracturing or warping. For me, this was good enough for something that would ultimately find a home on my bookshelf.

To identify any problems with the machinery of the product, I oriented the arms of the robot into the various positions I talked about in the requirements section to see how it would behave. All the elevated orientations resulted in the arms swinging down and then being driven by the machinery. I attribute this mainly to how the arms were fastened to the shaft: pinched between two shaft collars and padded from the shaft with some electrical tape. In one sense this was good since it was putting too much strain on the motor, but on the other hand it was disappointing. Despite this complication, I decided that I was ok with just having the arms hanging down and shuffling back and forth.

The second big part of the character of the product was the illumination of the eye and chest. The eye ended up having plenty of light while the chest merely flickered on and off. Changing the power supply design and dimensions of the body resulted in a reduced voltage and increased displacement resulting in the diminished output. Since things were already soldered, I chose to leave things as is.
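To illustrate why a reduced supply voltage dims an LED so noticeably, here is a minimal sketch of the usual series-resistor estimate, I = (V_supply - V_forward) / R. The forward voltage, resistor value and supply voltages below are hypothetical placeholders, not the values from this build, and the model ignores how the forward voltage itself shifts with current.

```python
def led_current_ma(supply_v: float, forward_v: float, resistor_ohm: float) -> float:
    """Estimate LED current in mA for a series resistor and LED.

    Returns 0 when the supply is below the LED's forward voltage,
    since the LED then barely conducts.
    """
    if resistor_ohm <= 0:
        raise ValueError("resistor_ohm must be positive")
    headroom_v = supply_v - forward_v
    return max(headroom_v, 0.0) / resistor_ohm * 1000.0


# Hypothetical values for illustration only.
FORWARD_V = 2.0       # assumed LED forward voltage
RESISTOR_OHM = 150.0  # assumed current-limiting resistor

if __name__ == "__main__":
    for supply_v in (5.0, 3.3, 2.5):
        current = led_current_ma(supply_v, FORWARD_V, RESISTOR_OHM)
        print(f"{supply_v:.1f} V supply -> {current:.1f} mA")
```

Because only the headroom above the forward voltage drives current, a modest drop in supply voltage can cut the LED current by a much larger fraction.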

Future Work

The end result here isn’t without flaws. Working through the project I recognized along the way that there were things I hadn’t done quite right or that just didn’t sit with me well. The following is a list of things I would try to keep in mind next time I take on a project like this.

As far as the 3D printing process went, I would go back and redo how I incorporated the latching mechanism for the motor chassis and back plate. The biggest problem here was that post fabrication modifications had to be made since I incorrectly understood the tapping process. Nothing ruins precision faster than making changes by hand.

I had overlooked the theory behind illumination and had instead focused more on intuition. In the future I would spend more time reading the literature on the right amount of light to use based on what I wanted to illuminate and the different techniques that exist for providing different types of coverage. In retrospect, I think this would have given the end result a more polished look.

The mechanical work was complicated by the mismatch between the imperial and metric standards of the parts involved. Part of this was poor planning on my part; part was difficulty finding the right parts at a hobbyist price point. Nonetheless, I’d like to continue to develop my understanding of mechanical systems and how they can be incorporated into electronically driven solutions.

I would have also incorporated some wiring management directly into the part so that it was less of a hassle to fit the back plate to the body with everything. I’d also switch to an existing cable management system instead of relying on screw terminals so that it was easier to snap things together and give the board a lower profile to save on space.

I’d like to explore printed circuit boards the next time I approach a project like this. My knowledge of circuit design going into this was limited, and it would have meant a lot of wasted time, material and money had I gone ahead and ordered PCBs this round. Given that I now have a working model to base future work on, I would like to explore this route in the future.

As far as the on-off functionality goes, next time I think I will use a series of relays to switch access to the voltage supplies whenever a momentary push button is engaged. I think this would lead to a cleaner separation of the two voltage supplies.

The timing circuit was complicated by the initial ramp up time, which gave rise to a slow initial rotation until the threshold was reached to go into astable mode. In the future I’d like to come up with a way to keep that initial ramp up from showing up in the output of the arms. Related to this, I’d like to be able to control the length of the timing pulses to swing between clockwise and counterclockwise rotations. In all likelihood, I’d use a microcontroller since it would give me the greatest range of flexibility.
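One way to see where a longer first cycle can come from is the textbook 555 astable configuration, where the timing capacitor starts from 0 V on power-up but afterwards only swings between 1/3 and 2/3 of the supply. The sketch below assumes that standard topology (two resistors `r1`, `r2` and one capacitor `c`) with hypothetical component values; it is not a model of the exact circuit used here.

```python
import math
from dataclasses import dataclass


@dataclass
class AstableTiming:
    first_high_s: float  # first output-high interval after power-up
    high_s: float        # steady-state output-high interval
    low_s: float         # steady-state output-low interval

    @property
    def frequency_hz(self) -> float:
        return 1.0 / (self.high_s + self.low_s)


def astable_555(r1_ohm: float, r2_ohm: float, c_farad: float) -> AstableTiming:
    """Ideal timing of a textbook 555 astable circuit.

    The capacitor charges through r1 + r2 and discharges through r2.
    On power-up it charges from 0 V to 2/3 Vcc (a factor of ln 3);
    afterwards it charges from 1/3 Vcc to 2/3 Vcc (a factor of ln 2).
    """
    charge_rc = (r1_ohm + r2_ohm) * c_farad
    discharge_rc = r2_ohm * c_farad
    return AstableTiming(
        first_high_s=math.log(3) * charge_rc,
        high_s=math.log(2) * charge_rc,
        low_s=math.log(2) * discharge_rc,
    )


if __name__ == "__main__":
    # Hypothetical component values for illustration only.
    timing = astable_555(r1_ohm=10_000, r2_ohm=100_000, c_farad=10e-6)
    print(f"first high: {timing.first_high_s:.3f} s")
    print(f"steady high: {timing.high_s:.3f} s, low: {timing.low_s:.3f} s")
    print(f"frequency: {timing.frequency_hz:.3f} Hz")
```

In this idealized model the first high interval is ln 3 / ln 2, or roughly 1.58 times, the steady-state one regardless of component values, which is part of why a microcontroller with explicit pulse lengths is attractive.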

Conclusion

With the finished product sitting on my bookshelf and reflecting on this project, the seasons it encompassed and the ups and downs I worked through, I have developed a greater appreciation for mechatronics, the physical product design cycle and the work people put into everyday products.

Taking the time to make something tangible for a change presented me with a number of challenges that I hadn’t had to face before and that’s what I enjoy most about these kinds of projects. It’s really about developing a new set of tools, techniques and thinking that I can apply to problems that arise in my personal and professional work.

This project allowed me to explore a number of interesting concepts within the framework of a seemingly simple toy. Let’s iterate over the main bullet points:

- Analog circuit design: complete analysis and use of passive components coupled with semiconductors, with first real exposure to transistors, operational amplifiers and 555 timers.

- Protoboard design, soldering, debugging and desoldering techniques.

- Exposure to driving DC motors using various techniques.

- Better understanding of hardware development and the product design process.

- Learned about industrial design guidelines and techniques for making cost effective products using 3D printed materials.

- Use of the finite element method to perform stress analysis of a complex geometric object. (Finally had an excuse to learn tensors.)

- Learned how to use an assortment of CAD, CAM and CAE software solutions.

Overall, the project produced a number of positive outcomes. As a stepping stone, this project has left me wanting to explore mechatronics more deeply and I’ve got a number of ideas brewing in mind that could lead to more advanced “toys” in the future. I feel confident that I can take the lessons learned from this experience and avoid pitfalls that I might encounter in more advanced projects of similar focus going forward. For now, those ideas will have to wait as I return to my world of code and numbers.

About the Author

Garrett Lewellen is a software developer working at a private start-up in the Denver Metro Area designing and developing SaaS-based systems. With eight years’ experience and formal education in computer science with emphasis in applied mathematics, his primary interests lie in the application of statistical models to problems that arise in general computing. When he’s not working on projects, he’s out exploring the Rocky Mountains and enjoying the great outdoors.

Copyright

“3D Printed Toy Robot” available under CC BY-NC-ND license. Copyright 2013 Garrett Lewellen. All rights reserved. Third-party trademarks property of their respective owners.

Bibliography

Part and Mold Design. [pdf] Pittsburgh, PA: Bayer Material Science, 2000. Web.

“A.5.8 Triangle Oscillator.” Op Amps For Everyone Design Guide (Rev. B). [pdf] Ed. Ron Mancini. N.p.: n.p., 2002. N. pag. Texas Instruments, 22 Aug. 2002. Web.

“Boost Converter.” Wikipedia. Wikimedia Foundation, 09 July 2013. Web. 15 Sept. 2013.

“What Is PWM? Pulse Width Modulation Tutorial in HD.” Electronics Tutorial Videos. N.p., 28 Nov. 2011. Web. 11 Sept. 2013.

Amado-Becker, Antonio, Jorge Ramos-Grez, María José Yañez, Yolanda Vargas, and Luis Gaete. “Elastic Tensor Stiffness Coefficients for SLS Nylon 12 under Different Degrees of Densification as Measured by Ultrasonic Technique.” [pdf] Rapid Prototyping Journal 14.5 (2008): 260-70. Web.

Chaniotakis, and Cory. “Operational Amplifier Circuits Comparators and Positive Feedback”. [pdf] 6.071J/22.071, Introduction to Electronics, Signals, and Measurement. Spring 2006 Lecture Notes.

Cook, David. “Driving Miss Motor.” Intermediate Robot Building. 2nd ed. Apress, 2010. N. pag. Print.

Cook, David. “H-Bridge Motor Driver Using Bipolar Transistors.” Bipolar Transistor HBridge Motor Driver. N.p., n.d. Web. 11 Sept. 2013.

EOS GmbH – Electro Optical Systems, “PA 2200”: [pdf] Material sheet, 2008.

Demircioglu, Ismail H. “Dynamic Model of a Permanent Magnet DC Motor”. [pdf] 11 Aug. 2007.

Jung, Walt, ed. Op Amp Applications Handbook. N.p.: Analog Devices, 2002. Web.

Kim, Nam H., and Bhavani V. Sankar. Introduction to Finite Element Analysis and Design. 1st ed. New York: John Wiley & Sons, 2009. Print.

Lancaster, Don. RTL Cookbook. [pdf] 3rd ed. Thatcher, Arizona: Synergetics, 2013. Web. 11 Sept. 2013.

Maksimović, Dragan. “Feedback in Electronic Circuits: An Introduction”. [pdf] ECEN 4228, Analog IC Design. Lecture Notes 1997.

Mantzel, Jamie. 3D Print Big Robot Project No. 1. N.d. Youtube.com. 11 Mar. 2012. Web.

Maxim, “CMOS Micropower Step-Up Switching Regulator”, [pdf] MAX630 datasheet, Sept. 2008.