Posts Tagged ‘C-Sharp’

Using GPUs to solve Spatial FitzHugh-Nagumo Equation Numerically

Introduction

Modeling Action Potentials

In neurophysiology, we wish to model how electrical impulses travel along the complex structures of neurons. In particular, along the axon since it is the principle outbound channel of the neuron. Along the axon are voltage gated ion channels in which concentrations of potassium and sodium are exchanged. During depolarization, a fast influx of sodium causes the voltage to gradually increase. During repolarization, a slow outflux of potassium causes the voltage to decrease gradually. Because the potassium gates are slow to close, there is a negative voltage dip and recovery phase afterwards called hyperpolarization.

Attempts to provide a continuous time model of these phases began with work in the 1950s by Hodgkin and Huxley [HH52]. The duo formulated a four-dimensional nonlinear system of ordinary differential equations based on an electrical circuit model of giant squid axons. This landmark contribution was later simplified in the 1960s by FitzHugh [Fit61]. Here FitzHugh casted the problem in terms of Van der Pol oscillators. This reformulation allowed him to explain the qualitative behavior of the system in terms of a two-dimensional excitation/relaxation state-space model. The following year Nagumo [NAY62] extended these equations spatially to model the desired action potential propagation along an axon, and demonstrated its behavior on analog computers. This spatial model will be the focus of this work, and a more comprehensive account of these developments can be found in [Kee02].

FitzHugh-Nagumo Equation

The Spatial FitzHugh-Nagumo equation is a two-dimensional nonlinear reaction-diffusion system:

| (1) |

Here represents the action potential (voltage) along the axon, and

the recovery of the system. Constant

is absent from the literature, and

is inversely proportional to recovery time.

Mathematical Analysis

The system does not admit a general analytic solution. Mathematical analysis of the system is concerned with the existence and stability of traveling waves with [AK15] providing a thorough account of these aspects. Other analyses are concerned with the state-space model and the relationship of parameters with observed physiological behavior. In particular: self-excitatory, impulse trains, single traveling wavefronts and impulses, doubly traveling divergent wave impulses, and non-excitatory behavior that returns the system to a resting state. Since the FitzHugh-Nagumo equations are well understood, more recent literature is focused on higher dimensional and stochastic variants of the system which [Tuc13] discusses in detail.

Numerical Analysis

A survey of the literature revealed several numerical approaches to solving the FitzHugh-Nagumo equations consisting of a Finite Element method with Backward Differentiation Formulae [Ott10], and the Method of Lines [Kee02] approach. In this work, three approaches based on the Finite Difference method are investigated: an explicit scheme, an adaptive explicit scheme, and an implicit scheme; along with their associated sequential CPU bound and parallel GPU bound algorithms.

Explicit Finite Difference Scheme

To study the basic properties of the FitzHugh-Nagumo equations an explicit scheme was devised using forward and central differences for the temporal and spatial derivatives respectively.

| (2) |

Truncation errors are linear in time, , and quadratic,

, in space. For stability,

, implying the use of inconveniently small time steps, however in practice it seems rare to find any mention of

in the literature. Each time step can be computed sequentially or in parallel in linear time,

. However, there is a significant constant factor improvement delivered by parallel GPU implementations since modern GPUs allow millions of nodes to be updated in parallel giving near constant runtime performance. Further parallelization is possible by trivially distributing contiguous node sets to a combination of multiple machines and multiple GPUs.

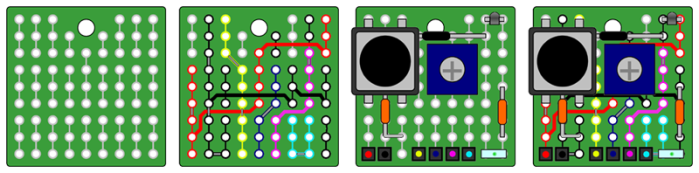

Experiments

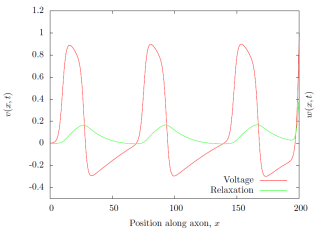

The explicit scheme was used to investigate the traveling wave and divergent wave behaviors of the FitzHugh-Nagumo equations. Fig. (1) demonstrate the application of a constant impulse, , at the end of an unexcited axon,

, giving rise to oscillatory behavior. Fig. (2) shows a Gaussian impulse applied initially to the center of the axon,

. As the system evolves, the impulse collapses giving rise to two separate impulses that travel in opposite directions before dissipating at the boundaries returning the axon to a completely unexcited state. Both test cases are qualitatively consistent with the literature and share the following parameters:

| (3) |

Error Analysis

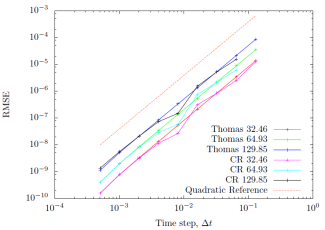

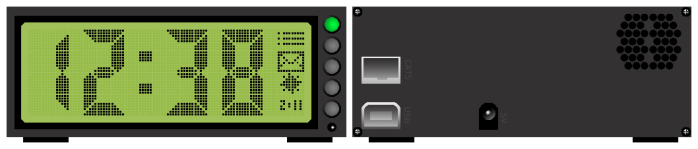

Figure 3: Varying values of |

Figure 4: Varying values of |

Two experiments were ran to verify the suggested truncation errors. The numerical solution given by a sufficiently small step size serves as an analytic solution to , which is then used to evaluate how larger steps sizes deviate as measured by the root mean squared error. Fig. (3) looks at varying

and shows that as the step size as halved, the resulting RMSE is quartered consistent with the expect quadratic truncation term of the scheme. Similarly, Fig. (4) looks at varying

and shows that independent of

, halving the step size results in an equally reduced RMSE consistent with the expected linear truncation term of the scheme.

Runtime Performance

Figure 5: Wall time for memory allocation, memory transfers, and core loop for both CPU and GPU. |

Figure 6: Wall time for just core loop for both CPU and GPU. |

Comparison of sequential CPU and parallel GPU bound algorithms is based on the wall time taken to perform a single iteration to calculate and

. Reported figures are a function of spatial node quantity and the mean run time of 30 iterations. The main bottleneck to performance is transferring buffers between the host and device. Fig. (5) illustrates this effect. For the largest test case considered,

, “GPU w/o tx” delivers 19x faster runtime over “GPU w/ tx” by not transferring intermediate results back to the host. Similarly, it delivers significantly faster runtime over the CPU by a factor of 73x. To better understand this performance boost, Fig. (6) looks at just the core loop execution time. For

, the core loops account for only 3.7% and 14.9% of the execution time on the CPU and GPU respectively with the GPU delivering 18x better performance than the CPU. These percentages increase monotonically, and suggest that memory transfers will eventually be dominated by sufficiently large inputs on GPUs with an abundance of memory.

Adaptive Explicit Finite Difference Scheme

While investigating the traveling wavefront solution of the system, numerical oscillations were observed as the simulation zeroed in on the steady state solution. To address this issue, an adaptive explicit scheme was devised. A survey of the literature suggested a family of moving grid methods to solve the FitzHugh-Nagumo system based on a Lagrangian formulation [VBSS90] and a method of lines formulation [Zwa11]. Here a heuristic is used to concentrate grid points where the most change takes place.

The formulation of the scheme is identical to the former section with second order spatial derivatives being approximated by Lagrange interpolating polynomials since the technique supports non-uniform grids. A three point, first order truncation error scheme is used. A five point, third order truncation error scheme was considered, but abandoned in favor of the empirically adequate three point scheme.

| (4) |

The first and second spatial derivatives given by the Lagrange interpolating polynomials are used to decide how much detail is needed in the domain in addition to the first temporal derivative given by finite differences. First, a coarse grid is laid down across the entire domain to minimize the expected distance between nodes since the second order derivative has first order truncation error. Next, the magnitude of the first order spatial derivative (second order truncation error) is used to lay down a finer grid when the derivative is greater than a specified threshold. This corresponds to where the waves of the solution are.

Next, the temporal derivative is used to lay down an even finer grid in those areas having an absolute change above a specified threshold. The change in time corresponds to the dynamics of the system, by adding detail in these areas, we can preserve the behavior of the system. Finally, the zeros of the second spatial derivative serve as indicators of inflection points in the solution. These correspond most closely to the location of the traveling wavefronts of the equation. Here, the most detail is provided around a fixed radius of the inflection points since the width of the wavefronts does not depend on parameterization.

Each iteration, the explicit scheme is evaluated on the grid from the previous iteration and those results are then used to perform the grid building scheme. To map the available solution values to the new grid, the points are linearly interpolated if a new grid point falls between two previous points, or mapped directly if there is a stationary grid point between the two iterations. The latter will be the more common case since all grid points are chosen from an underlying uniform grid specified by the user. Linear interpolation will only take place when extra grid points are included in an area.

Experiments

Figure 7: Top: Numerical oscillation of a centered Gaussian impulse with and all other parameters the same as those given in previous section for

. Bottom: Eliminated numerical oscillation based on the adaptive explicit scheme.

Fig. (7) is the motivating example for this scheme and demonstrates how numerical oscillations can be avoided by avoiding calculations in regions with stationary solutions. To demonstrate that the scheme works well for other test cases, the more interesting and dynamic divergent impulse test case is shown in Fig. (8). Here we can see that as time progresses, points are allocated to regions of the grid that are most influenced by the system’s dynamics without sacrificing quality. For the time steps shown, the adaptive scheme used 2-3x fewer nodes than the explicit scheme from the previous section.

Figure 8: Example of adaptive grid on the divergent impulse example for { 15.06, 30.13, 45.05, 60.12, 75.05, 90.11 }. Red lines given by the explicit scheme from the previous section, green dots given by adaptive explicit scheme.

Implicit Finite Difference Scheme

An implicit Crank-Nicolson scheme is used in this section to solve the FitzHugh-Nagumo equations. To simplify the computations, is solved explicitly using a second order central difference scheme before solving

leading to the following formulation:

| (5) |

The truncation error for this scheme is with an improved, albeit still restrictive, requirement over the explicit scheme that

. Based on this formulation, the right-hand side is completely known when

is calculated. This gives a simpler expression to consider:

| (6) |

Newton’s method is used to solve the nonlinear function with an initial guess equal to the previous time step’s values,

. To refine the estimate, the following system is solved iteratively until the magnitude of the refinement,

, is less than a specified tolerance or machine epsilon.

| (7) |

This formulation gives rise to a tridiagonal Jacobian matrix, , where the first and last row are specified by no-flux boundary conditions, and constant

off-diagonal entries and variable

diagonal entries given by the partial derivatives of each nodal equation.

|

|

|

This tridiagonal system can be solved sequentially in time using the Thomas algorithm or in

time using the Cyclic Reduction algorithm of [Hoc65]. Cyclic Reduction begins with a forward phase in which all odd indexed unknowns are eliminated recursively until a

or

system remains that can be solved directly. Cyclic Reduction ends with a backward phase that walks up the recursion stack to solve for the previously eliminated odd indexed unknowns. Since the algorithm decouples unknowns at each recursion level of the forward and backwards phases, these reductions can be done in parallel in

time on the GPU assuming

fold parallelism.

Further parallelism can be achieved by solving points explicitly along the domain, then using those results to create implicit subdomains that can be solved using either Thomas or Cyclic Reduction algorithms on a combination of multiple machines and multiple GPUs at the expense of additional communication and coordination.

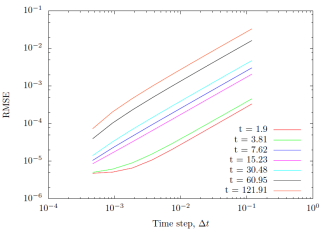

Error Analysis

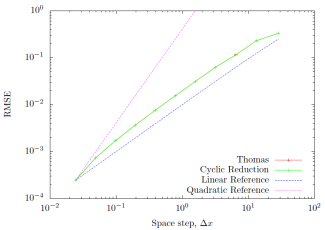

Figure 9: Varying values of |

Figure 10: Varying values of |

Evaluation of the spatial error revealed an unexpected linear behavior as shown in Fig. (9). As the spatial step is halved, the resulting error was expected to become quartered, instead it is halved. No clear explanation was discovered to account for this discrepancy. With respect to time, Fig. (10) shows that both the Thomas and Cyclic Reduction algorithms were quadratic as multiple points in time were evaluated. The Thomas algorithm produced aberrations as the step size increased eventually producing numerical instability, while the Cyclic Reduction algorithm was immune to this issue.

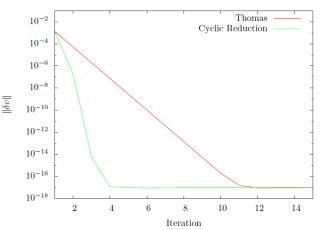

Figure 11: Stability of implicit solvers. |

Figure 12: Convergence of implicit solvers. |

In terms of stability, the implicit scheme is stable up to depending on which tridiagonal solver is used, and for comparison, the explicit scheme is stable up to precisely

as shown in Fig. (11). The Thomas algorithm demonstrates a slightly weaker stability guarantee than the Cyclic Reduction algorithm becoming unstable around

and

respectively. In terms of convergence, the Thomas algorithm typically takes more iterations than Cyclic Reduction to obtain the same degree of accuracy as shown in Fig. (12). Taking the sequence of adjustments up to machine epsilon

, Thomas algorithm gives

suggesting q-linear convergence. Likewise, Cyclic Reduction gives

suggesting q-quadratic convergence.

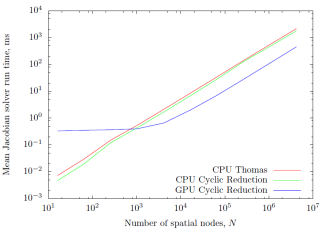

Runtime Performance

Figure 13: Performance comparison of CPU and GPU bound Thomas and Cyclic Reduction algorithms. |

Figure 14: Performance comparison of Jacobian solvers. |

Sequential Thomas and Cyclic Reduction routines perform equally well on CPU as shown in Fig. (13). The parallel Cyclic Reduction method did not demonstrate significant performance gains on the GPU. However, looking at just the time taken to solve the Jacobian each iteration (not including initialization or memory transfers), parallel Cyclic Reduction on the GPU was 4-5x faster than both sequential CPU solvers as shown in Fig. (14).

To explain the poor performance of Cyclic Reduction on the GPU, there are a number of different factors at play. The algorithm is susceptible to warp divergence due to the large number of conditionals that take place. Reliance on global memory access with varying strides contributes to slow performance since shared memory can’t be effectively utilized, and each iteration the adjustments are transferred from device to host to decide if Newton’s method should terminate. These different factors suggest alternative GPU implementations need to be investigated to address these different issues.

Discussion

Work Environment

All results in this paper were based on code written in C#, and compiled using Microsoft Visual Studio Express with Release settings to run on a commodity class Intel Core i7-2360QM quad core processor. Open source CUDAfy.NET is used to run C# to CUDA translated code on a commodity class NVIDIA GeForce GT 525m having two streaming multiprocessors providing 96 CUDA cores.

Future Work

Numerically, additional work could be done on the adaptive explicit scheme. In preparing the scheme, a cubic spline-based approach was abandoned in favor of simpler approaches due to time pressures. It would be worthwhile to explore how to solve the system on a spline-based “grid”.

In addition, further work could be done to optimize the implementation of the parallel Cyclic Reduction algorithm on the GPU since it delivered disappointing runtime behavior compared to the sequential algorithm on the CPU. [CmWH14] mention several different optimizations to try, and I believe better global memory access will improve runtime at the expense of more complicated addressing. As an alternative to Cyclic Reduction, both [CmWH14] and [ZCO10] detail several different parallel tridiagonal solvers that could be deployed.

In terms of models considered, there are a number of different directions that could be pursued including higher dimensional [MC04], coupled [Cat14]], [Ril06], and stochastic variants [Tuc13] of the spatial FitzHugh-Nagumo equation. Coming from a probabilistic background, I would be interested in investing time in learning how to solve stochastic ordinary and partial differential equations.

Conclusion

Three finite difference schemes were evaluated. An explicit scheme shows great performance on both the CPU and GPU, but it is susceptible to numerical oscillations. To address this issue, an adaptive explicit scheme based on heuristics was devised and is able to eliminate these issues while requiring fewer nodes to produce results on-par with the explicit scheme. An implicit scheme was evaluated which demonstrated a principled, and robust solution for a variety of test cases and is the favored approach of the three evaluated.

References

[AK15] Gianni Arioli and Hans Koch. Existence and stability of traveling pulse solutions of the fitzhugh-nagumo equation. Nonlinear Analysis: Theory, Methods and Applications, 113:51-70, 2015

[Cat14] Anna Cattani. Fitzhugh-nagumo equations with generalized diffusive coupling. Mathematical Biosciences and Engineering, 11(2):203-215, April 2014

[CmWH14] Li-Wen Chang and Wen mei W. Hwu. A guide for implementing tridiagonal solvers on gpus. In Numerical Computations with GPUs, pages 29-44. Springer International Publishing, 2014.

[Fit61] Richard FitzHugh. Impulses and physiological states in theoretical models of nerve membrane. Biophysical journal, 1(6):445, 1961.

[HH52] Alan L. Hodgkin and Andrew F. Huxley. A quantitative description of membrane current and its application to conduction and excitation in nerve. The Journal of physiology, 117(4):500-544, 1952.

[Hoc65] R. W. Hockney. A fast direct solution of poisson’s equation using fourier analysis. J. ACM, 12(1):95-113, Jan 1965.

[Kee02] James P. Keener. Spatial modeling. In Computational Cell Biology,volume 20 of Interdisciplinary Applied Mathematics, pages 171-197. Springer New York, 2002.

[MC04] Maria Murillo and Xiao-Chuan Cai. A fully implicit parallel algorithm for simulating the non-linear electrical activity of the heart. Numerical linear algebra with applications, 11(2-3):261-277, 2004.

[NAY62] J. Nagumo, S. Arimoto, and S. Yoshizawa. An active pulse transmission line simulating nerve axon. Proceedings of the IRE, 50(10):2061-2070, Oct 1962.

[Ott10] Denny Otten. Mathematical models of reaction diffusion systems, their numerical solutions and the freezing method with comsol multiphysics. 2010.

[Ril06] Caroline Jane Riley. Reaction and diffusion on the sierpinkski gasket. PhD thesis, University of Manchester, 2006.

[Tuc13] Henry C. Tuckwell. Stochastic partial differential equations in neurobiology. Linear and nonlinear models for spiking neurons. In Stochastic Biomathematical Models, volume 2058 of Lecture Notes in Mathematics. Springer Berlin Heidelberg, 2013.

[VBSS89] J. G. Verwer, J. G. Blom, and J. M. Sanz-Serna. An adaptive moving grid method for one dimensional systems of partial differential equations. Journal of Computational Physics, 82(2):454-486, 1989.

[ZCO10] Yao Zhang, Jonathan Cohen, and John D. Owens. Fast tridiagonal solvers on the gpu. SIGPLAN Not., 45(5):127-136, Jan 2010.

[Zwa11] M. N. Zwarts. A test set for an adaptive moving grid pde solver with time-dependent adaptivity. Master’s thesis. Utrecht University, Utrecht, Netherlands, 2011.

k-Means Clustering using CUDAfy.NET

Introduction

I’ve been wanting to learn how to utilize general purpose graphics processing units (GPGPUs) to speed up computation intensive machine learning algorithms, so I took some time to test the waters by implementing a parallelized version of the unsupervised k-means clustering algorithm using CUDAfy.NET– a C# wrapper for doing parallel computation on CUDA-enabled GPGPUs. I’ve also implemented sequential and parallel versions of the algorithm in C++ (Windows API), C# (.NET, CUDAfy.NET), and Python (scikit-learn, numpy) to illustrate the relative merits of each technology and paradigm on three separate benchmarks: varying point quantity, point dimension, and cluster quantity. I’ll cover the results, and along the way talk about performance and development considerations of the three technologies before wrapping up with how I’d like to utilize the GPGPU on more involved machine learning algorithms in the future.

Algorithms

Sequential

The traditional algorithm attributed to [Stu82] begins as follows:

- Pick

points at random as the starting centroid of each cluster.

- do (until convergence)

- For each point in data set:

- labels[point] = Assign(point, centroids)

- centroids = Aggregate(points, labels)

- convergence = DetermineConvergence()

- For each point in data set:

- return centroids

Assign labels each point with the label of the nearest centroid, and Aggregate updates the positions of the centroids based on the new point assignments. In terms of complexity, let’s start with the Assign routine. For each of the points we’ll compute the distance to each of the

centroids and pick the centroid with the shortest distance that we’ll assign to the point. This is an example of the Nearest Neighbor Search problem. Linear search gives

which is preferable to using something like k-d trees which requires repeated superlinear construction and querying. Assuming Euclidean distance and points from

, this gives time complexity

. The

Aggregate routine will take . Assuming convergence is guaranteed in

iterations then the resulting complexity is

which lends to an effectively linear algorithm.

Parallel

[LiFa89] was among the first to study several different shared memory parallel algorithms for k-means clustering, and here I will be going with the following one:

- Pick

points at random as the starting centroid of each cluster.

- Partition

points into

equally sized sets

- Run to completion threadId from 1 to

as:

- do (until convergence)

- sum, count = zero(

), zero(

)

- For each point in partition[threadId]:

- label = Assign(point, centroids)

- For each dim in point:

- sum[

* label + dim] += point[dim]

- sum[

- count[label] = count[label] + 1

- if(barrier.Synchronize())

- centroids = sum / count

- convergence = DetermineConvergence()

- sum, count = zero(

- do (until convergence)

- return centroids

The parallel algorithm can be viewed as smaller instances of the sequential algorithm processing

chunks of points in parallel. There are two main departures from the sequential approach 1) future centroid positions are accumulated and counted after each labeling and 2) each iteration of

while loops are synchronized before continuing on to the next iteration using a barrier – a way of ensuring all threads wait for the last thread to arrive, then continue to wait as the last one enters the barrier, and exits allowing the other threads to exit.

In terms of time complexity, Assign remains unchanged at , and incrementing the sums and counts for the point’s label takes time

. Thus for

points, a single iteration of the loop gives

time. Given

threads, the maximum time would be given by the thread that enters the barrier, and assuming at most

iterations, then the overall complexity is

. Which suggests we should see at most a

speedup over the sequential implementation for large values of

.

GPGPU

The earliest work I found on doing k-means clustering on NVIDIA hardware in the academic literature was [MaMi09]. The following is based on that work, and the work I did above on the parallel algorithm:

- Pick

points at random as the starting centroid of each cluster.

- Partition

into

blocks such that each block contains no more than

points

- do (until convergence)

- Initialize sums, counts to zero

- Process blockId 1 to

,

at a time in parallel on the GPGPU:

- If threadId == 0

- Initialize blockSum, blockCounts to zero

- Synchronize Threads

- label = Assign(points[blockId *

+ threadId], centroids)

- For each dim in points[blockId *

+ threadId]:

- atomic blockSum[label * pointDim + dim] += points[blockId *

+ threadId]

- atomic blockSum[label * pointDim + dim] += points[blockId *

- atomic blockCount[label] += 1

- Synchronize Threads

- If threadId == 0

- atomic sums += blockSum

- atomic counts += blockCounts

- If threadId == 0

- centroids = sums / counts

- convergence = DetermineConvergence()

The initialization phase is similar to the parallel algorithm, although now we need to take into account the way that the GPGPU will process data. There are a handful of Streaming Multiprocessors on the GPGPU that process a single “block” at a time. Here we assign no more than points to a block such that each point runs as a single thread to be executed on each of the CUDA cores of the Streaming Multiprocessor.

When a single block is executing we’ll initialize the running sum and count as we did in the parallel case, then request that the threads running synchronize, then proceed to calculate the label of the point assigned to the thread atomically update the running sum and count. The threads must then synchronize again, and this time only the very first thread atomically copy those block level sum and counts over to the global sum and counts shared by all of the blocks.

Let’s figure out the time complexity. A single thread in a block being executed by a Streaming Multiprocessor takes time assuming that all

threads of the block execute in parallel, that there are

blocks, and

Streaming Multiprocessors, then the complexity becomes:

. Since

, and at most

iterations can go by in parallel, we are left with

. So the expected speedup over the sequential algorithm should be

.

Expected performance

For large values of , if we allow

to be significantly larger than

, we should expect the parallel version to 8x faster than the sequential version and the GPGPU version to be 255x faster than the sequential version given that

for the given set of hardware that will be used to conduct tests. For

to be significantly larger than

, then parallel is the same, and GPGPU version should be 340x faster than the sequential version. Now, it’s very important to point out that these are upper bounds. It is most likely that observed speedups will be significantly less due to technical issues like memory allocation, synchronization, and caching issues that are not incorporated (and difficult to incorporate) into the calculations.

Implementations

I’m going to skip the sequential implementation since it’s not interesting. Instead, I’m going to cover the C++ parallel and C# GPGPU implementations in detail, then briefly mention how scikit-learn was configured for testing.

C++

The parallel Windows API implementation is straightforward. The following will begin with the basic building blocks, then get into the high level orchestration code. Let’s begin with the barrier implementation. Since I’m running on Windows 7, I’m unable to use the convenient InitializeSynchronizationBarrier, EnterSynchronizationBarrier, and DeleteSynchronizationBarrier API calls beginning with Windows 8. Instead I opted to implement a barrier using a condition variable and critical section as follows:

// ----------------------------------------------------------------------------

// Synchronization utility functions

// ----------------------------------------------------------------------------

struct Barrier {

CONDITION_VARIABLE conditionVariable;

CRITICAL_SECTION criticalSection;

int atBarrier;

int expectedAtBarrier;

};

void deleteBarrier(Barrier* barrier) {

DeleteCriticalSection(&(barrier->criticalSection));

// No API for delete condition variable

}

void initializeBarrier(Barrier* barrier, int numThreads) {

barrier->atBarrier = 0;

barrier->expectedAtBarrier = numThreads;

InitializeConditionVariable(&(barrier->conditionVariable));

InitializeCriticalSection(&(barrier->criticalSection));

}

bool synchronizeBarrier(Barrier* barrier, void (*func)(void*), void* data) {

bool lastToEnter = false;

EnterCriticalSection(&(barrier->criticalSection));

++(barrier->atBarrier);

if (barrier->atBarrier == barrier->expectedAtBarrier) {

barrier->atBarrier = 0;

lastToEnter = true;

func(data);

WakeAllConditionVariable(&(barrier->conditionVariable));

}

else {

SleepConditionVariableCS(&(barrier->conditionVariable), &(barrier->criticalSection), INFINITE);

}

LeaveCriticalSection(&(barrier->criticalSection));

return lastToEnter;

}

A Barrier struct contains the necessary details of how many threads have arrived at the barrier, how many are expected, and structs for the condition variable and critical section.

When a thread arrives at the barrier (synchronizeBarrier) it requests the critical section before attempting to increment the atBarrier variable. It checks to see if it is the last to arrive, and if so, resets the number of threads at the barrier to zero and invokes the callback to perform post barrier actions exclusively before notifying the other threads through the condition variable that they can resume. If the thread is not the last to arrive, then it goes to sleep until the condition variable is invoked. The reason why LeaveCriticalSection is included outside the the if statement is because SleepConditionVariableCS will release the critical section before putting the thread to sleep, then reacquire the critical section when it awakes. I don’t like that behavior since its an unnecessary acquisition of the critical section and slows down the implementation.

There is a single allocation routine which performs a couple different rounds of error checking when calling calloc; first to check if the routine returned null, and second to see if it set a Windows error code that I could inspect from GetLastError. If either event is true, the application will terminate.

// ----------------------------------------------------------------------------

// Allocation utility functions

// ----------------------------------------------------------------------------

void* checkedCalloc(size_t count, size_t size) {

SetLastError(NO_ERROR);

void* result = calloc(count, size);

DWORD lastError = GetLastError();

if (result == NULL) {

fprintf(stdout, "Failed to allocate %d bytes. GetLastError() = %d.", size, lastError);

ExitProcess(EXIT_FAILURE);

}

if (result != NULL && lastError != NO_ERROR) {

fprintf(stdout, "Allocated %d bytes. GetLastError() = %d.", size, lastError);

ExitProcess(EXIT_FAILURE);

}

return result;

}

Now on to the core of the implementation. A series of structs are specified for those data that are shared (e.g., points, centroids, etc) among the threads, and those that are local to each thread (e.g., point boundaries, partial results).

// ----------------------------------------------------------------------------

// Parallel Implementation

// ----------------------------------------------------------------------------

struct LocalAssignData;

struct SharedAssignData {

Barrier barrier;

bool continueLoop;

int numPoints;

int pointDim;

int K;

double* points;

double* centroids;

int* labels;

int maxIter;

double change;

double pChange;

DWORD numProcessors;

DWORD numThreads;

LocalAssignData* local;

};

struct LocalAssignData {

SharedAssignData* shared;

int begin;

int end;

int* labelCount;

double* partialCentroids;

};

The assign method does exactly what was specified in the parallel algorithm section. It will iterate over the portion of points it is responsible for, compute their labels and its partial centroids (sum of points with label , division done at aggregate step.).

void assign(int* label, int begin, int end, int* labelCount, int K, double* points, int pointDim, double* centroids, double* partialCentroids) {

int* local = (int*)checkedCalloc(end - begin, sizeof(int));

int* localCount = (int*)checkedCalloc(K, sizeof(int));

double* localPartial = (double*)checkedCalloc(pointDim * K, sizeof(double));

// Process a chunk of the array.

for (int point = begin; point < end; ++point) {

double optDist = INFINITY;

int optCentroid = -1;

for (int centroid = 0; centroid < K; ++centroid) {

double dist = 0.0;

for (int dim = 0; dim < pointDim; ++dim) {

double d = points[point * pointDim + dim] - centroids[centroid * pointDim + dim];

dist += d * d;

}

if (dist < optDist) {

optDist = dist;

optCentroid = centroid;

}

}

local[point - begin] = optCentroid;

++localCount[optCentroid];

for (int dim = 0; dim < pointDim; ++dim)

localPartial[optCentroid * pointDim + dim] += points[point * pointDim + dim];

}

memcpy(&label[begin], local, sizeof(int) * (end - begin));

free(local);

memcpy(labelCount, localCount, sizeof(int) * K);

free(localCount);

memcpy(partialCentroids, localPartial, sizeof(double) * pointDim * K);

free(localPartial);

}

One thing that I experimented with that gave me better performance was allocating and using memory within the function instead of allocating the memory outside and using within the assign routine. This in particular was motivated after I read about false sharing where two separate threads writing to the same cache line cause coherence updates to cascade in the CPU causing overall performance to degrade. For labelCount and partialCentroids they’re reallocated since I was concerned about data locality and wanted the three arrays to be relatively in the same neighborhood of memory. Speaking of which, memory coalescing is used for the points array so that point dimensions are adjacent in memory to take advantage of caching. Overall, a series of cache friendly optimizations.

The aggregate routine follows similar set of enhancements. The core of the method is to compute the new centroid locations based on the partial sums and centroid assignment counts given by args->shared->local[t].partialCentroids and args->shared->local[t].labelCount[t]. Using these partial results all the routine to complete in time which assuming all of these parameters are significantly less than

, gives a constant time routine. Once the centroids have been updated, the change in their location is computed and used to determine convergence along with how many iterations have gone by. Here if more than 1,000 iterations have occurred or the relative change in position is less than some tolerance (0.1%) then the threads will terminate.

void aggregate(void * data) {

LocalAssignData* args = (LocalAssignData*)data;

int* assignmentCounts = (int*)checkedCalloc(args->shared->K, sizeof(int));

double* newCentroids = (double*)checkedCalloc(args->shared->K * args->shared->pointDim, sizeof(double));

// Compute the assignment counts from the work the threads did.

for (int t = 0; t < args->shared->numThreads; ++t)

for (int k = 0; k < args->shared->K; ++k)

assignmentCounts[k] += args->shared->local[t].labelCount[k];

// Compute the location of the new centroids based on the work that the

// threads did.

for (int t = 0; t < args->shared->numThreads; ++t)

for (int k = 0; k < args->shared->K; ++k)

for (int dim = 0; dim < args->shared->pointDim; ++dim)

newCentroids[k * args->shared->pointDim + dim] += args->shared->local[t].partialCentroids[k * args->shared->pointDim + dim];

for (int k = 0; k < args->shared->K; ++k)

for (int dim = 0; dim < args->shared->pointDim; ++dim)

newCentroids[k * args->shared->pointDim + dim] /= assignmentCounts[k];

// See by how much did the position of the centroids changed.

args->shared->change = 0.0;

for (int k = 0; k < args->shared->K; ++k)

for (int dim = 0; dim < args->shared->pointDim; ++dim) {

double d = args->shared->centroids[k * args->shared->pointDim + dim] - newCentroids[k * args->shared->pointDim + dim];

args->shared->change += d * d;

}

// Store the new centroid locations into the centroid output.

memcpy(args->shared->centroids, newCentroids, sizeof(double) * args->shared->pointDim * args->shared->K);

// Decide if the loop should continue or terminate. (max iterations

// exceeded, or relative change not exceeded.)

args->shared->continueLoop = args->shared->change > 0.001 * args->shared->pChange && --(args->shared->maxIter) > 0;

args->shared->pChange = args->shared->change;

free(assignmentCounts);

free(newCentroids);

}

Each individual thread follows the same specification as given in the parallel algorithm section, and follows the calling convention required by the Windows API.

DWORD WINAPI assignThread(LPVOID data) {

LocalAssignData* args = (LocalAssignData*)data;

while (args->shared->continueLoop) {

memset(args->labelCount, 0, sizeof(int) * args->shared->K);

// Assign points cluster labels

assign(args->shared->labels, args->begin, args->end, args->labelCount, args->shared->K, args->shared->points, args->shared->pointDim, args->shared->centroids, args->partialCentroids);

// Tell the last thread to enter here to aggreagate the data within a

// critical section

synchronizeBarrier(&(args->shared->barrier), aggregate, args);

};

return 0;

}

The parallel algorithm controller itself is fairly simple and is responsible for basic preparation, bookkeeping, and cleanup. The number of processors is used to determine the number of threads to launch. The calling thread will run one instance will the remaining instances will run on separate threads. The data is partitioned, then the threads are spawned using the

CreateThread routine. I wish there was a Windows API that would allow me to simultaneously create threads with a specified array of arguments because CreateThread will automatically start the thread as soon as it’s created. If lots of threads are being created, then the first will wait a long time before the last one gets around to reaching the barrier. Subsequent iterations of the synchronized loops will have better performance, but it would be nice to avoid that initial delay. After kicking off the threads, the main thread will run its own block of data, and once all threads terminate, the routine will close open handles and free allocated memory.

void kMeansFitParallel(double* points, int numPoints, int pointDim, int K, double* centroids) {

// Lookup and calculate all the threading related values.

SYSTEM_INFO systemInfo;

GetSystemInfo(&systemInfo);

DWORD numProcessors = systemInfo.dwNumberOfProcessors;

DWORD numThreads = numProcessors - 1;

DWORD pointsPerProcessor = numPoints / numProcessors;

// Prepare the shared arguments that will get passed to each thread.

SharedAssignData shared;

shared.numPoints = numPoints;

shared.pointDim = pointDim;

shared.K = K;

shared.points = points;

shared.continueLoop = true;

shared.maxIter = 1000;

shared.pChange = 0.0;

shared.change = 0.0;

shared.numThreads = numThreads;

shared.numProcessors = numProcessors;

initializeBarrier(&(shared.barrier), numProcessors);

shared.centroids = centroids;

for (int i = 0; i < K; ++i) {

int point = rand() % numPoints;

for (int dim = 0; dim < pointDim; ++dim)

shared.centroids[i * pointDim + dim] = points[point * pointDim + dim];

}

shared.labels = (int*)checkedCalloc(numPoints, sizeof(int));

// Create thread workload descriptors

LocalAssignData* local = (LocalAssignData*)checkedCalloc(numProcessors, sizeof(LocalAssignData));

for (int i = 0; i < numProcessors; ++i) {

local[i].shared = &shared;

local[i].begin = i * pointsPerProcessor;

local[i].end = min((i + 1) * pointsPerProcessor, numPoints);

local[i].labelCount = (int*)checkedCalloc(K, sizeof(int));

local[i].partialCentroids = (double*)checkedCalloc(K * pointDim, sizeof(double));

}

shared.local = local;

// Kick off the threads

HANDLE* threads = (HANDLE*)checkedCalloc(numThreads, sizeof(HANDLE));

for (int i = 0; i < numThreads; ++i)

threads[i] = CreateThread(0, 0, assignThread, &local[i + 1], 0, NULL);

// Do work on this thread so that it's just not sitting here idle while the

// other threads are doing work.

assignThread(&local[0]);

// Clean up

WaitForMultipleObjects(numThreads, threads, true, INFINITE);

for (int i = 0; i < numThreads; ++i)

CloseHandle(threads[i]);

free(threads);

for (int i = 0; i < numProcessors; ++i) {

free(local[i].labelCount);

free(local[i].partialCentroids);

}

free(local);

free(shared.labels);

deleteBarrier(&(shared.barrier));

}

C#

The CUDAfy.NET GPGPU C# implementation required a lot of experimentation to find an efficient solution.

In the GPGPU paradigm there is a host and a device in which sequential operations take place on the host (ie. managed C# code) and parallel operations on the device (ie. CUDA code). To delineate between the two, the [Cudafy] method attribute is used on the static public method assign. The set of host operations are all within the Fit routine.

Under the CUDA model, threads are bundled together into blocks, and blocks together into a grid. Here the data is partitioned so that each block consists of half the maximum number of threads possible per block and the total number of blocks is the number of points divided by that quantity. This was done through experimentation, and motivated by Thomas Bradley’s Advanced CUDA Optimization workshop notes [pdf] that suggest at that regime the memory lines become saturated and cannot yield better throughput. Each block runs on a Streaming Multiprocessor (a collection of CUDA cores) having shared memory that the threads within the block can use. These blocks are then executed in pipeline fashion on the available Streaming Multiprocessors to give the desired performance from the GPGPU.

What is nice about the shared memory is that it is much faster than the global memory of the GPGPU. (cf. Using Shared Memory in CUDA C/C++) To make use of this fact the threads will rely on two arrays in shared memory: sum of the points and the count of those belonging to each centroid. Once the arrays have been zeroed out by the threads, all of the threads will proceed to find the nearest centroid of the single point they are assigned to and then update those shared arrays using the appropriate atomic operations. Once all of the threads complete that assignment, the very first thread will then add the arrays in shared memory to those in the global memory using the appropriate atomic operations.

using Cudafy;

using Cudafy.Host;

using Cudafy.Translator;

using Cudafy.Atomics;

using System;

namespace CUDAfyTesting {

public class CUDAfyKMeans {

[Cudafy]

public static void assign(GThread thread, int[] constValues, double[] centroids, double[] points, float[] outputSums, int[] outputCounts) {

// Unpack the const value array

int pointDim = constValues[0];

int K = constValues[1];

int numPoints = constValues[2];

// Ensure that the point is within the boundaries of the points

// array.

int tId = thread.threadIdx.x;

int point = thread.blockIdx.x * thread.blockDim.x + tId;

if (point >= numPoints)

return;

// Use two shared arrays since they are much faster than global

// memory. The shared arrays will be scoped to the block that this

// thread belongs to.

// Accumulate the each point's dimension assigned to the k'th

// centroid. When K = 128 => pointDim = 2; when pointDim = 128

// => K = 2; Thus max(len(sharedSums)) = 256.

float[] sharedSums = thread.AllocateShared<float>("sums", 256);

if (tId < K * pointDim)

sharedSums[tId] = 0.0f;

// Keep track of how many times the k'th centroid has been assigned

// to a point. max(K) = 128

int[] sharedCounts = thread.AllocateShared<int>("counts", 128);

if (tId < K)

sharedCounts[tId] = 0;

// Make sure all threads share the same shared state before doing

// any calculations.

thread.SyncThreads();

// Find the optCentroid for point.

double optDist = double.PositiveInfinity;

int optCentroid = -1;

for (int centroid = 0; centroid < K; ++centroid) {

double dist = 0.0;

for (int dim = 0; dim < pointDim; ++dim) {

double d = centroids[centroid * pointDim + dim] - points[point * pointDim + dim];

dist += d * d;

}

if (dist < optDist) {

optDist = dist;

optCentroid = centroid;

}

}

// Add the point to the optCentroid sum

for (int dim = 0; dim < pointDim; ++dim)

// CUDA doesn't support double precision atomicAdd so cast down

// to float...

thread.atomicAdd(ref(sharedSums[optCentroid * pointDim + dim]), (float)points[point * pointDim + dim]);

// Increment the optCentroid count

thread.atomicAdd(ref(sharedCounts[optCentroid]), +1);

// Wait for all of the threads to complete populating the shared

// memory before storing the results back to global memory where

// the host can access the results.

thread.SyncThreads();

// Have to do a lock on both of these since some other Streaming

// Multiprocessor could be running and attempting to update the

// values at the same time.

// Copy the shared sums to the output sums

if (tId == 0)

for (int i = 0; i < K * pointDim; ++i)

thread.atomicAdd(ref(outputSums[i]), sharedSums[i]);

// Copy the shared counts to the output counts

if (tId == 0)

for (int i = 0; i < K; i++)

thread.atomicAdd(ref(outputCounts[i]), sharedCounts[i]);

}

Before going on to the Fit method, let’s look at what CUDAfy.NET is doing under the hood to convert the C# code to run on the CUDA-enabled GPGPU. Within the CUDAfy.Translator namespace there are a handful of classes for decompiling the application into an abstract syntax tree using ICharpCode.Decompiler and Mono.Cecil, then converting the AST over to CUDA C via visitor pattern, next compiling the resulting CUDA C using NVIDIA’s NVCC compiler, and finally the compilation result is relayed back to the caller if there’s a problem; otherwise, a CudafyModule instance is returned, and the compiled CUDA C code it represents loaded up on the GPGPU. (The classes and method calls of interest are: CudafyTranslator.DoCudafy, CudaLanguage.RunTransformsAndGenerateCode, CUDAAstBuilder.GenerateCode, CUDAOutputVisitor and CudafyModule.Compile.)

private CudafyModule cudafyModule;

private GPGPU gpgpu;

private GPGPUProperties properties;

public int PointDim { get; private set; }

public double[] Centroids { get; private set; }

public CUDAfyKMeans() {

cudafyModule = CudafyTranslator.Cudafy();

gpgpu = CudafyHost.GetDevice(CudafyModes.Target, CudafyModes.DeviceId);

properties = gpgpu.GetDeviceProperties(true);

gpgpu.LoadModule(cudafyModule);

}

The Fit method follows the same paradigm that I presented earlier with the C++ code. The main difference here is the copying of managed .NET resources (arrays) over to the device. I found these operations to be relatively time intensive and I did find some suggestions from the CUDAfy.NET website on how to use pinned memory- essentially copy the managed memory to unmanaged memory, then do an asynchronous transfer from the host to the device. I tried this with the points arrays since its the largest resource, but did not see noticeable gains so I left it as is.

At the beginning of each iteration of the main loop, the device counts and sums are cleared out through the Set method, then the CUDA code is invoked using the Launch routine with the specified block and grid dimensions and device pointers. One thing that the API does is return an array when you allocate or copy memory over to the device. Personally, an IntPtr seems more appropriate. Execution of the routine is very quick, where on some of my tests it took 1 to 4 ms to process 100,000 two dimensional points. Once the routine returns, memory from the device (sum and counts) is copied back over to the host which then does a quick operation to derive the new centroid locations and copy that memory over to the device for the next iteration.

public void Fit(double[] points, int pointDim, int K) {

if (K <= 0)

throw new ArgumentOutOfRangeException("K", "Must be greater than zero.");

if (pointDim <= 0)

throw new ArgumentOutOfRangeException("pointDim", "Must be greater than zero.");

if (points.Length < pointDim)

throw new ArgumentOutOfRangeException("points", "Must have atleast pointDim entries.");

if (points.Length % pointDim != 0)

throw new ArgumentException("points.Length must be n * pointDim > 0.");

int numPoints = points.Length / pointDim;

// Figure out the partitioning of the data.

int threadsPerBlock = properties.MaxThreadsPerBlock / 2;

int numBlocks = (numPoints / threadsPerBlock) + (numPoints % threadsPerBlock > 0 ? 1 : 0);

dim3 blockSize = new dim3(threadsPerBlock, 1, 1);

dim3 gridSize = new dim3(

Math.Min(properties.MaxGridSize.x, numBlocks),

Math.Min(properties.MaxGridSize.y, (numBlocks / properties.MaxGridSize.x) + (numBlocks % properties.MaxGridSize.x > 0 ? 1 : 0)),

1

);

int[] constValues = new int[] { pointDim, K, numPoints };

float[] assignmentSums = new float[pointDim * K];

int[] assignmentCount = new int[K];

// Initial centroid locations picked at random

Random prng = new Random();

double[] centroids = new double[K * pointDim];

for (int centroid = 0; centroid < K; centroid++) {

int point = prng.Next(points.Length / pointDim);

for (int dim = 0; dim < pointDim; dim++)

centroids[centroid * pointDim + dim] = points[point * pointDim + dim];

}

// These arrays are only read from on the GPU- they are never written

// on the GPU.

int[] deviceConstValues = gpgpu.CopyToDevice<int>(constValues);

double[] deviceCentroids = gpgpu.CopyToDevice<double>(centroids);

double[] devicePoints = gpgpu.CopyToDevice<double>(points);

// These arrays are written written to on the GPU.

float[] deviceSums = gpgpu.CopyToDevice<float>(assignmentSums);

int[] deviceCount = gpgpu.CopyToDevice<int>(assignmentCount);

// Set up main loop so that no more than maxIter iterations take

// place, and that a realative change less than 1% in centroid

// positions will terminate the loop.

int maxIter = 1000;

double change = 0.0, pChange = 0.0;

do {

pChange = change;

// Clear out the assignments, and assignment counts on the GPU.

gpgpu.Set(deviceSums);

gpgpu.Set(deviceCount);

// Lauch the GPU portion

gpgpu.Launch(gridSize, blockSize, "assign", deviceConstValues, deviceCentroids, devicePoints, deviceSums, deviceCount);

// Copy the results memory from the GPU over to the CPU.

gpgpu.CopyFromDevice<float>(deviceSums, assignmentSums);

gpgpu.CopyFromDevice<int>(deviceCount, assignmentCount);

// Compute the new centroid locations.

double[] newCentroids = new double[centroids.Length];

for (int centroid = 0; centroid < K; ++centroid)

for (int dim = 0; dim < pointDim; ++dim)

newCentroids[centroid * pointDim + dim] = assignmentSums[centroid * pointDim + dim] / assignmentCount[centroid];

// Calculate how much the centroids have changed to decide

// whether or not to terminate the loop.

change = 0.0;

for (int centroid = 0; centroid < K; ++centroid)

for (int dim = 0; dim < pointDim; ++dim) {

double d = newCentroids[centroid * pointDim + dim] - centroids[centroid * pointDim + dim];

change += d * d;

}

// Update centroid locations on CPU & GPU

Array.Copy(newCentroids, centroids, newCentroids.Length);

deviceCentroids = gpgpu.CopyToDevice<double>(centroids);

} while (change > 0.01 * pChange && --maxIter > 0);

gpgpu.FreeAll();

this.Centroids = centroids;

this.PointDim = pointDim;

}

}

}

Python

I include the Python implementation for the sake of demonstrating how scikit-learn was invoked throughout the following experiments section.

model = KMeans(

n_clusters = numClusters,

init='random',

n_init = 1,

max_iter = 1000,

tol = 1e-3,

precompute_distances = False,

verbose = 0,

copy_x = False,

n_jobs = numThreads

);

model.fit(X); // X = (numPoints, pointDim) numpy array.

Experimental Setup

All experiments where conducted on a laptop with an Intel Core i7-2630QM Processor and NVIDIA GeForce GT 525M GPGPU running Windows 7 Home Premium. C++ and C# implementations were developed and compiled by Microsoft Visual Studio Express 2013 for Desktop targeting C# .NET Framework 4.5 (Release, Mixed Platforms) and C++ (Release, Win32). Python implementation was developed and compiled using Eclipse Luna 4.4.1 targeting Python 2.7, scikit-learn 0.16.0, and numpy 1.9.1. All compilers use default arguments and no extra optimization flags.

For each test, each reported test point is the median of thirty sample run times of a given algorithm and set of arguments. Run time is computed as the (wall) time taken to execute model.fit(points, pointDim, numClusters) where time is measured by: QueryPerformanceCounter in C++, System.Diagnostics.Stopwatch in C#, and time.clock in Python. Every test is based on a dataset having two natural clusters at .25 or -.25 in each dimension.

Results

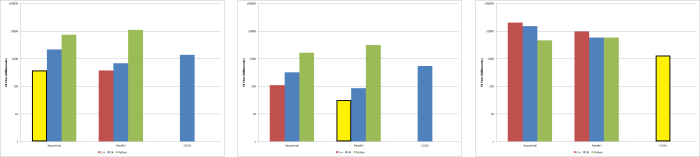

Varying point quantity

|

Both the C++ and C# sequential and parallel implementations outperform the Python scikit-learn implementations. However, the C++ sequential and parallel implementations outperforms their C# counterparts. Though the C++ sequential and parallel implementations are tied, as it seems the overhead associated with multithreading overrides any multithreaded performance gains one would expect. The C# CUDAfy.NET implementation surprisingly does not outperform the C# parallel implementation, but does outperform the C# sequential one as the number of points to cluster increases.

So what’s the deal with Python scikit-learn? Why is the parallel version so slow? Well, it turns out I misunderstood the nJobs parameter. I interpreted this to mean that process of clustering a single set of points would be done in parallel; however, it actually means that the number of simultaneous runs of the whole process will occur in parallel. I was tipped off to this when I noticed multiple python.exe fork processes being spun off which surprised me that someone would implement a parallel routine that way leading to a more thorough reading the scikit-learn documentation. There is parallelism going on with scikit-learn, just not the desired type. Taking that into account the linear one performs reasonably well for being a dynamically typed interpreted language.

Varying point dimension

|

The C++ and C# parallel implementations exhibit consistent improved run time over their sequential counterparts. In all cases the performance is better than scikit-learn’s. Surprisingly, the C# CUDAfy.NET implementation does worse than both the C# sequential and parallel implementations. Why do we not better CUDAfy.NET performance? The performance we see is identical to the vary point quantity test. So on one hand it’s nice that increasing the point dimensions did not dramatically increase the run time, but ideally, the CUDAfy.NET performance should be better than the sequential and parallel C# variants for this test. My leading theory is that higher point dimensions result in more data that must be transferred between host and device which is a relatively slow process. Since I’m short on time, this will have to be something I investigate in more detail in the future.

Varying cluster quantity

|

As in the point dimension test, the C++ and C# parallel implementations outperform their sequential counterparts, while the scikit-learn implementation starts to show some competitive performance. The exciting news of course is that varying the cluster size finally reveals improved C# CUDAfy.NET run time. Now there is some curious behavior at the beginning of each plot. We get performance for two clusters, then jump up into about

for four to eight clusters. Number of points and their dimension are held constant, but we allocate a few extra double’s for the cluster centroids. I believe this has to do with cache behavior. I’m assuming for fewer than four clusters everything that’s needed sits nicely in the fast L1 cache, and moving up to four and more clusters requires more exchanging of data between L1, L2, L3, and (slower) memory memory to the different cores of the Intel Core i7-2630QM processor I’m using. As before, I’ll need to do some more tests to verify that this is what is truly happening.

Language comparison

For the three tests considered, the C++ implementations gave the best run time performance on point quantity and point dimension tests while the C# CUDAfy.NET implementation gave the best performance on the cluster quantity test.

The C++ implementation could be made to run faster be preallocating memory in the same fashion that C# does. In C# when an application is first created a block of memory is allocated for the managed heap. As a result, allocation of reference types in C# is done by incrementing a pointer instead of doing an unmanaged allocation (malloc, etc.). (cf. Automatic Memory Management) This allocation takes place before executing the C# routines, while the same allocation takes place during the C++ routines. Hence, the C++ run times will have an overhead not present in the C# run times. Had I implemented memory allocation in C++ the same as it’s done in C#, then the C++ implementation would be undoubtedly even faster than the C# ones.

While using scikit-learn in Python is convenient for exploratory data analysis and prototyping machine learning algorithms, it leaves much to be desired in performance; frequently coming ten times slower than the other two implementations on the varying point quantity and dimension tests, but within tolerance on the vary cluster quantity tests.

Future Work

The algorithmic approach here was to parallelize work on data points, but as the dimension of each point increases, it may make sense to explore algorithms that parallelize work across dimensions instead of points.

I’d like to spend more time figuring out some of the high-performance nuances of programming the GPGPU (as well as traditional C++), which take more time and patience than a week or two I spent on this. In addition, I’d like to dig a little deeper into doing CUDA C directly rather than through the convenient CUDAfy.NET wrapper; as well as explore OpenMP and OpenCL to see how they compare from a development and performance-oriented view to CUDA.

Python and scikit-learn were used a baseline here, but it would be worth spending extra time to see how R and Julia compare, especially the latter since Julia pitches itself as a high-performance solution, and is used for exploratory data analysis and prototyping machine learning systems.

While the emphasis here was on trying out CUDAfy.NET and getting some exposure to GPGPU programming, I’d like to apply CUDAfy.NET to the expectation maximization algorithm for fitting multivariate Gaussian mixture models to a dataset. GMMs are a natural extension of k-means clustering, and it will be good to implement the more involved EM algorithm.

Conclusions

Through this exercise, we can expect to see modest speedups over sequential implementations of about 2.62x and 11.69x in the C# parallel and GPGPU implementations respectively when attempting to find large numbers of clusters on low dimensional data. Fortunately the way you use k-means clustering is to find the cluster quantity that maximizes the Bayesian information criterion or Akaike information criterion which means running the vary centroid quantity test on real data. On the other hand, most machine learning data is of a high dimension so further testing (on a real data set) would be needed to verify it’s effectiveness in a production environment. Nonetheless, we’ve seen how parallel and GPGPU based approaches can reduce the time it takes to complete the clustering task, and learned some things along the way that can be applied to future work.

Bibliography

[LiFa89] Li Xiaobo and Fang Zhixi, “Parallel clustering algorithms”, Parallel Computing, 1989, 11(3): pp.275-290.

[MaMi09] Mario Zechner, Michael Granitzer. “Accelerating K-Means on the Graphics Processor via CUDA.” First International Conference on Intensive Applications and Services, INTENSIVE’09. pp. 7-15, 2009.

[Stu82] Stuart P. Lloyd. Least Squares Quantization in PCM. IEEE Transactions on Information Theory, 28:129-137, 1982.

Abstract Algebra in C#

Motivation

In C++ it is easy to define arbitrary template methods for computations involving primitive numeric types because all types inherently have arithmetic operations defined. Thus, a programmer need only implement one method for all numeric types. The compiler will infer the use and substitute the type at compile time and emit the appropriate machine instructions. This is C++’s approach to parametric polymorphism.

With the release of C# 2.0 in the fall of 2005, the language finally got a taste of parametric polymorphism in the form of generics. Unfortunately, types in C# do not inherently have arithmetic operations defined, so methods involving computations must use ad-hoc polymorphism to achieve the same result as in C++. The consequence is a greater bloat in code and an increased maintenance liability.

To get around this design limitation, I’ve decided to leverage C#’s approach to subtype polymorphism and to draw from Abstract Algebra to implement a collection of interfaces allowing for C++ like template functionality in C#. The following is an overview of the mathematical theory used to support and guide the design of my solution. In addition, I will present example problems from mathematics and computer science that can be represented in this solution along with examples how type agnostic computations that can be performed using this solution.

Abstract Algebra

Abstract Algebra is focused on how different algebraic structures behave in the presence of different axioms, operations and sets. In the following three sections, I will go over the fundamental sub-fields and how they are represented under the solution.

In all three sections, I will represent the distinction between algebraic structures using C# interfaces. The type parameters on these interfaces represent the sets being acted upon by each algebraic structure. This convention is consistent with intuitionistic (i.e., Chruch-style) type theory embraced by C#’s Common Type System (CTS). Use of parameter constraints will be used when type parameters are intended to be of a specific type. Functions on the set and elements of the set will be represented by methods and properties respectively.

Group Theory

Group Theory is the simplest of sub-fields of Abstract Algebra dealing with the study of a single binary operation, , acting on a set

. There are five axioms used to describe the structures studied under Group Theory:

- Closure:

- Associativity:

- Commutativity :

- Identity:

- Inverse:

The simplest of these structures is the Groupoid satisfying only axiom (1). Any Groupoid also satisfying axiom (2) is known as a Semi-group. Any Semi-group satisfying axiom (4) is a Monoid. Monoid’s also satisfying axiom (5) are known as Groups. Any Group satisfying axiom (3) is an Abelian Group.

public interface IGroupoid<T> {

T Operation(T a, T b);

}

public interface ISemigroup<T> : IGroupoid<T> {

}

public interface IMonoid<T> : ISemigroup<T> {

T Identity { get; }

}

public interface IGroup<T> : IMonoid<T> {

T Inverse(T t);

}

public interface IAbelianGroup<T> : IGroup<T> {

}

Ring Theory

The next logical sub-field of Abstract Algebra to study is Ring Theory which is the study of two operations, and

, on a single set. In addition to the axioms outlined above, there is an addition axiom for describing how one operations distributes over the other.

- Distributivity:

All of the following ring structures satisfy axiom (6). Rings are distinguished by the properties of their operands. The simplest of these structures is the Ringoid where both operands are given by Groupoids. Any Ringoid whose operands are Semi-groups is a Semi-ring. Any Semi-ring whose first operand is a Group is a Ring. Any Ring whose second operand is a Monoid is a Ring with Unity. Any Ring with Unity whose second operand is a Group is Division Ring. Any Division Ring whose operands are both Abelian Groups is a Field.

public interface IRingoid<T, A, M>

where A : IGroupoid<T>

where M : IGroupoid<T> {

A Addition { get; }

M Multiplication { get; }

T Distribute(T a, T b);

}

public interface ISemiring<T, A, M> : IRingoid<T, A, M>

where A : ISemigroup<T>

where M : ISemigroup<T> {

}

public interface IRing<T, A, M> : ISemiring<T, A, M>

where A : IGroup<T>

where M : ISemigroup<T> {

}

public interface IRingWithUnity<T, A, M> : IRing<T, A, M>

where A : IGroup<T>

where M : IMonoid<T> {

}

public interface IDivisionRing<T, A, M> : IRingWithUnity<T, A, M>

where A : IGroup<T>

where M : IGroup<T> {

}

public interface IField<T, A, M> : IDivisionRing<T, A, M>

where A : IAbelianGroup<T>

where M : IAbelianGroup<T> {

}

Module Theory

The last, and more familiar, sub-field of Abstract Algebra is Module Theory which deals with structures with an operation, , over two separate sets:

and

that satisfy the following axioms.

- Distributivity of

:

- Distributivity of

:

- Associativity of

:

All of the following module structures satisfy axioms (7)-(9). A Module consists of a scalar Ring and an vector Abelian Group. Any Module whose Ring is a Ring with Unity is a Unitary Module. Any Unitary Module whose Ring with Unity is a Abelian Group is a Vector Space.

public interface IModule<

TScalar,

TVector,

TScalarRing,

TScalarAddativeGroup,

TScalarMultiplicativeSemigroup,

TVectorAddativeAbelianGroup

>

where TScalarRing : IRing<TScalar, TScalarAddativeGroup, TScalarMultiplicativeSemigroup>

where TScalarAddativeGroup : IGroup<TScalar>

where TScalarMultiplicativeSemigroup : ISemigroup<TScalar>

where TVectorAddativeAbelianGroup : IAbelianGroup<TVector>

{

TScalarRing Scalar { get; }

TVectorAddativeAbelianGroup Vector { get; }

TVector Distribute(TScalar t, TVector r);

}

public interface IUnitaryModule<

TScalar,

TVector,

TScalarRingWithUnity,

TScalarAddativeGroup,

TScalarMultiplicativeMonoid,

TVectorAddativeAbelianGroup

>

: IModule<

TScalar,

TVector,

TScalarRingWithUnity,

TScalarAddativeGroup,

TScalarMultiplicativeMonoid,

TVectorAddativeAbelianGroup

>

where TScalarRingWithUnity : IRingWithUnity<TScalar, TScalarAddativeGroup, TScalarMultiplicativeMonoid>

where TScalarAddativeGroup : IGroup<TScalar>

where TScalarMultiplicativeMonoid : IMonoid<TScalar>

where TVectorAddativeAbelianGroup : IAbelianGroup<TVector>

{

}

public interface IVectorSpace<

TScalar,

TVector,

TScalarField,

TScalarAddativeAbelianGroup,

TScalarMultiplicativeAbelianGroup,

TVectorAddativeAbelianGroup

>

: IUnitaryModule<

TScalar,

TVector,

TScalarField,

TScalarAddativeAbelianGroup,

TScalarMultiplicativeAbelianGroup,

TVectorAddativeAbelianGroup

>

where TScalarField : IField<TScalar, TScalarAddativeAbelianGroup, TScalarMultiplicativeAbelianGroup>

where TScalarAddativeAbelianGroup : IAbelianGroup<TScalar>

where TScalarMultiplicativeAbelianGroup : IAbelianGroup<TScalar>

where TVectorAddativeAbelianGroup : IAbelianGroup<TVector>

{

}

Representation of Value Types

The CTS allows for both value and reference types on the .NET Common Language Infrastructure (CLI). The following are examples of how each theory presented above can leverage value types found in the C# language to represent concepts drawn from mathematics.

Enum Value Types and the Dihedral Group

One of the simplest finite groups is the Dihedral Group of order eight, , representing the different orientations of a square,

, obtained by reflecting the square about the vertical axis,

, and rotating the square by ninety degrees,

. The generating set is given by

and gives rise to the set

These elements are assigned names as follows:

,

,

,

,

,

,

and

. The relationship between these elements is visualized below:

The easiest way to represent this group as a value type is with an enum.

enum Symmetry { Rot000, Rot090, Rot180, Rot270, RefVer, RefDes, RefHoz, RefAsc }

From this enum we can define the basic Group Theory algebraic structures to take us to .

public class SymmetryGroupoid : IGroupoid<Symmetry> {

public Symmetry Operation(Symmetry a, Symmetry b) {

// 64 cases

}

}

public class SymmetrySemigroup : SymmetryGroupoid, ISemigroup<Symmetry> {

}

public class SymmetryMonoid : SymmetrySemigroup, IMonoid<Symmetry> {

public Symmetry Identity {

get { return Symmetry.Rot000; }

}

}

public class SymmetryGroup : SymmetryMonoid, IGroup<Symmetry> {

public Symmetry Inverse(Symmetry a) {

switch (a) {

case Symmetry.Rot000:

return Symmetry.Rot000;

case Symmetry.Rot090:

return Symmetry.Rot270;

case Symmetry.Rot180:

return Symmetry.Rot270;

case Symmetry.Rot270:

return Symmetry.Rot090;

case Symmetry.RefVer:

return Symmetry.RefVer;

case Symmetry.RefDes:

return Symmetry.RefAsc;

case Symmetry.RefHoz:

return Symmetry.RefHoz;

case Symmetry.RefAsc:

return Symmetry.RefDes;

}

throw new NotImplementedException();

}

}

Integral Value Types and the Commutative Ring with Unity over

C# exposes a number of fixed bit integral value types that allow a programmer to pick an integral value type suitable for the scenario at hand. Operations over these integral value types form a commutative ring with unity whose set is the congruence class where

is the number of bits used to represent the integer and

is the equivalance class

with

.

Addition is given by just as multiplication is given by

. Both statements are equivalent to the following congruence statements:

and

respectively.

Under the binary numeral system, modulo is equivalent to ignoring the bits exceeding

, or equivalently,

where

. As a result only the first (right most) bits need to be considered when computing the sum or product of two congruence classes, or in this case, integer values in C#. Thus, in the following implementation, it is not necessary to write any extra code to represent these operations other than writing them in their native form.

The reason why we are limited to a commutative ring with unity instead of a full field is that multiplicative inverses do not exist for all elements. A multiplicative inverse only exists when where

is the multiplicative inverse of

. For a solution to exist,

. Immediately, any even value of

will not have a multiplicative inverse in

. However, all odd numbers will.

public class AddativeIntegerGroupoid : IGroupoid<long> {

public long Operation(long a, long b) {

return a + b;

}

}

public class AddativeIntegerSemigroup : AddativeIntegerGroupoid, ISemigroup<long> {

}

public class AddativeIntegerMonoid : AddativeIntegerSemigroup, IMonoid<long> {

public long Identity {

get { return 0L; }

}

}

public class AddativeIntegerGroup : AddativeIntegerMonoid, IGroup<long> {

public long Inverse(long a) {

return -a;

}

}

public class AddativeIntegerAbelianGroup : AddativeIntegerGroup, IAbelianGroup<long> {

}

public class MultiplicativeIntegerGroupoid : IGroupoid<long> {

public long Operation(long a, long b) {

return a * b;

}

}

public class MultiplicativeIntegerSemigroup : MultiplicativeIntegerGroupoid, ISemigroup<long> {

}

public class MultiplicativeIntegerMonoid : MultiplicativeIntegerSemigroup, IMonoid<long> {

public long Identity {

get { return 1L; }

}

}

public class IntegerRingoid : IRingoid<long, AddativeIntegerGroupoid, MultiplicativeIntegerGroupoid> {

public AddativeIntegerGroupoid Addition { get; private set;}

public MultiplicativeIntegerGroupoid Multiplication { get; private set;}

public IntegerRingoid() {

Addition = new AddativeIntegerGroupoid();

Multiplication = new MultiplicativeIntegerGroupoid();

}

public long Distribute(long a, long b) {

return Multiplication.Operation(a, b);

}

}

public class IntegerSemiring : IntegerRingoid, ISemiring<long, AddativeIntegerSemiring, MultiplicativeIntegerSemiring> {

public AddativeIntegerSemiring Addition { get; private set;}

public MultiplicativeIntegerSemiring Multiplication { get; private set;}

public IntegerSemiring() : base() {

Addition = new AddativeIntegerSemiring();

Multiplication = new MultiplicativeIntegerSemiring();

}

}

public class IntegerRing : IntegerSemiring, IRing<long, AddativeIntegerGroup, MultiplicativeIntegerSemigroup>{

public new AddativeIntegerGroup Addition { get; private set; }

public IntegerRing() : base() {

Addition = new AddativeIntegerGroup();

}

}

public class IntegerRingWithUnity : IntegerRing, IRingWithUnity<long, AddativeIntegerGroup, MultiplicativeIntegerMonoid> {

public MultiplicativeIntegerMonoid Multiplication { get; private set; }

public IntegerRingWithUnity() : base() {

Multiplication = new MultiplicativeIntegerMonoid();

}

}

Floating-point Value Types and the Real Vector Space

C# offers three types that approximate the set of Reals: floats, doubles and decimals. Floats are the least representative followed by doubles and decimals. These types are obviously not continuous, but the error involved in rounding calculations with respect to the calculations in question are negligible and for most intensive purposes can be treated as continuous.

As in the previous discussion on the integers, additive and multiplicative classes are defined over the algebraic structures defined in the Group and Ring Theory sections presented above. In addition to these implementations, an additional class is defined to describe a vector.

public class Vector<T> {

private T[] vector;

public int Dimension {

get { return vector.Length; }

}

public T this[int n] {

get { return vector[n]; }

set { vector[n] = value; }

}

public Vector() {

vector = new T[2];

}

}

With these classes, it is now possible to implement the algebraic structures presented in the Module Theory section from above.

public class RealVectorModule : IModule<double, Vector<double>, RealRing, AddativeRealGroup, MultiplicativeRealSemigroup, VectorAbelianGroup<double>> {

public RealRing Scalar {

get;

private set;

}

public VectorAbelianGroup<double> Vector {

get;

private set;

}

public RealVectorModule() {

Scalar = new RealRing();

Vector = new VectorAbelianGroup<double>(new AddativeRealAbelianGroup());

}

public Vector<double> Distribute(double t, Vector<double> r) {

Vector<double> c = new Vector<double>();

for (int i = 0; i < c.Dimension; i++)

c[i] = Scalar.Multiplication.Operation(t, r[i]);

return c;

}

}

public class RealVectorUnitaryModule : RealVectorModule, IUnitaryModule<double, Vector<double>, RealRingWithUnity, AddativeRealGroup, MultiplicativeRealMonoid, VectorAbelianGroup<double>> {

public new RealRingWithUnity Scalar {

get;

private set;

}

public RealVectorUnitaryModule()

: base() {

Scalar = new RealRingWithUnity();

}

}